Hello,

I'm using the LLPM sample code on the nRF52840.

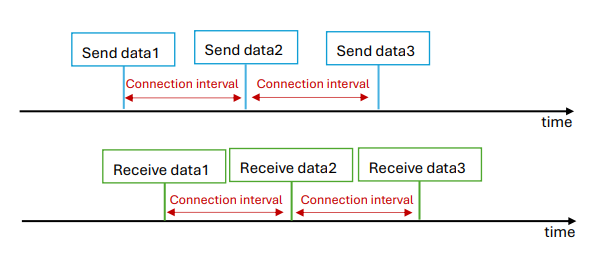

I'm confused about the connection interval.

From this post, I understood the connection interval to be the shortest time between data transmissions: after the first data packet is sent, the second can only be sent once the connection interval has elapsed.

Also, with non-LLPM BLE code, I can change the minimum interval between transmissions by modifying CONFIG_BT_PERIPHERAL_PREF_MIN_INT, whose description also refers to the connection interval.
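For reference, my understanding is that CONFIG_BT_PERIPHERAL_PREF_MIN_INT is expressed in units of 1.25 ms, so a value of 6 would correspond to 7.5 ms. A minimal sketch of that conversion (the helper name is my own and not part of any SDK):

```c
#include <stdint.h>

/* Hypothetical helper (not an SDK function): convert a connection interval
 * given in 1.25 ms units to microseconds. */
static inline uint32_t conn_interval_units_to_us(uint16_t units)
{
    /* 1.25 ms = 1250 us per unit */
    return (uint32_t)units * 1250u;
}
```

For example, passing 6 gives 7500 us, i.e. 7.5 ms.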

However, I modified the following parts of the LLPM code to measure the interval between received packets, and found that even with a 1 ms connection interval, the shortest interval between received packets is still 7.5 ms.

static void test_run(void)
{
    ...
    /* Start sending the timestamp to its peer */
    while (default_conn) {
        ...
        //k_sleep(K_MSEC(200)); /* Don't wait */
        ...
    }
}

void latency_request(const void *buf, uint16_t len)
{
    uint32_t time = k_cycle_get_32();
    uint8_t value[len];

    memcpy(value, buf, len);
    /* Convert cycles directly to ms; a 32-bit nanosecond value overflows after about 4.3 s */
    printk("Received data time: %u ms\n", k_cyc_to_ms_near32(time));
}

int main(void)
{
    int err;
    ...
    static const struct bt_latency_cb data_callbacks = {
        .latency_request = latency_request,
    };

    //err = bt_latency_init(&latency, NULL);
    err = bt_latency_init(&latency, &data_callbacks);
    if (err) {
        printk("Latency service initialization failed (err %d)\n", err);
        return 0;
    }
    ...
}
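To read the gap between packets directly instead of comparing absolute timestamps in the log, the difference between two consecutive cycle counts could be computed roughly as follows. This is only a sketch: `elapsed_cycles` and `cycles_to_us` are names I made up, and on the target the inputs would presumably come from k_cycle_get_32() and sys_clock_hw_cycles_per_sec().

```c
#include <stdint.h>

/* Hypothetical helper: cycles elapsed between two 32-bit counter reads.
 * Unsigned subtraction stays correct across a single counter wrap. */
static inline uint32_t elapsed_cycles(uint32_t prev, uint32_t now)
{
    return now - prev;
}

/* Hypothetical helper: convert a cycle count to microseconds (rounded down).
 * The 64-bit intermediate keeps the multiplication from overflowing. */
static inline uint32_t cycles_to_us(uint32_t cycles, uint32_t cycles_per_sec)
{
    return (uint32_t)(((uint64_t)cycles * 1000000u) / cycles_per_sec);
}
```

As an illustration, with an assumed 32768 Hz cycle clock, a gap of 246 cycles works out to about 7.5 ms.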

So now I am confused about what the connection interval actually means. Is it related to the interval at which data is transmitted?