Hi!

I'm having a bit of trouble with my measurements. Here is a short sketch of the situation:

I'm using two nRF51822 DKs that act as peripherals and connect to an nRF51 Dongle that acts as the central. The peripherals run application code that continuously increments a characteristic and sends notifications about it to the central. As a result, each peripheral sends the maximum number of packets per connection interval that the central allows (3 in this case, since that is the maximum supported by the newest firmware for the Dongle), with each packet carrying the maximum of 20 bytes of user payload. Both peripherals use the HIGH BW configuration for both TX and RX, which is the default setting.
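To get a feel for how much radio time this traffic actually takes per connection event, here is a rough Python sketch. It assumes (none of this is stated above) the 1 Mbps LE PHY, an unencrypted link (no 4-byte MIC), no retransmissions, an empty packet from the central before each notification, and the 150 µs inter-frame space. It only estimates airtime and is not meant to reproduce how the SoftDevice computes tEEO.

```python
# Rough on-air time of one connection event for the traffic described above.
# Assumptions (not from the post): 1 Mbps LE PHY, unencrypted link (no MIC),
# no retransmissions, 150 us inter-frame space (T_IFS), and an empty
# central packet before each notification.

US_PER_BYTE = 8   # 1 Mbps -> 1 us per bit
T_IFS_US = 150    # inter-frame space between consecutive packets

# Link-layer framing: preamble (1) + access address (4) + PDU header (2) + CRC (3)
LL_OVERHEAD_BYTES = 1 + 4 + 2 + 3


def packet_time_us(ll_payload_bytes):
    """Airtime of one link-layer packet with the given PDU payload length."""
    return (LL_OVERHEAD_BYTES + ll_payload_bytes) * US_PER_BYTE


def notification_payload_bytes(value_bytes):
    """L2CAP header (4) + ATT opcode (1) + attribute handle (2) + value."""
    return 4 + 1 + 2 + value_bytes


def event_airtime_us(packets, value_bytes=20):
    """Central empty packet, T_IFS, peripheral notification; repeated
    `packets` times with T_IFS between consecutive exchanges."""
    central_empty = packet_time_us(0)
    notification = packet_time_us(notification_payload_bytes(value_bytes))
    exchange = central_empty + T_IFS_US + notification
    return packets * exchange + (packets - 1) * T_IFS_US


print(event_airtime_us(3))  # 1878 (microseconds)
```

Under these assumptions, 3 notifications of 20 bytes each occupy roughly 1.9 ms of radio time per event.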

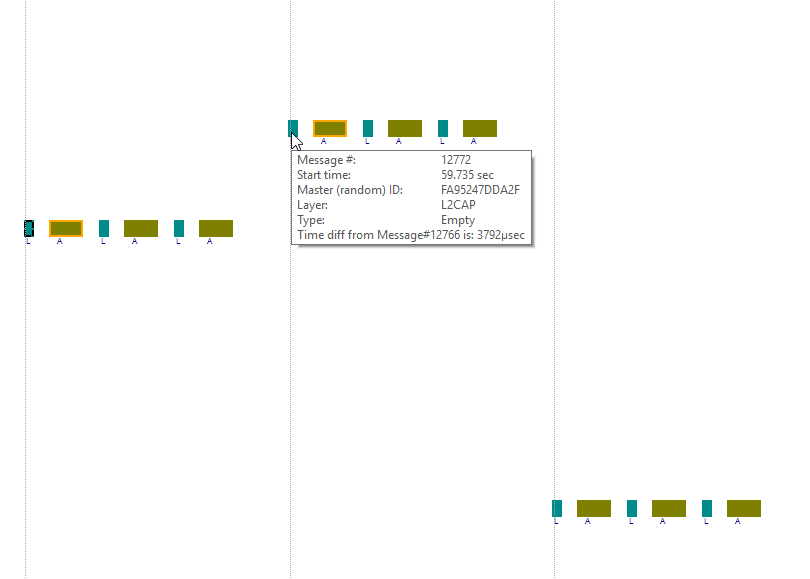

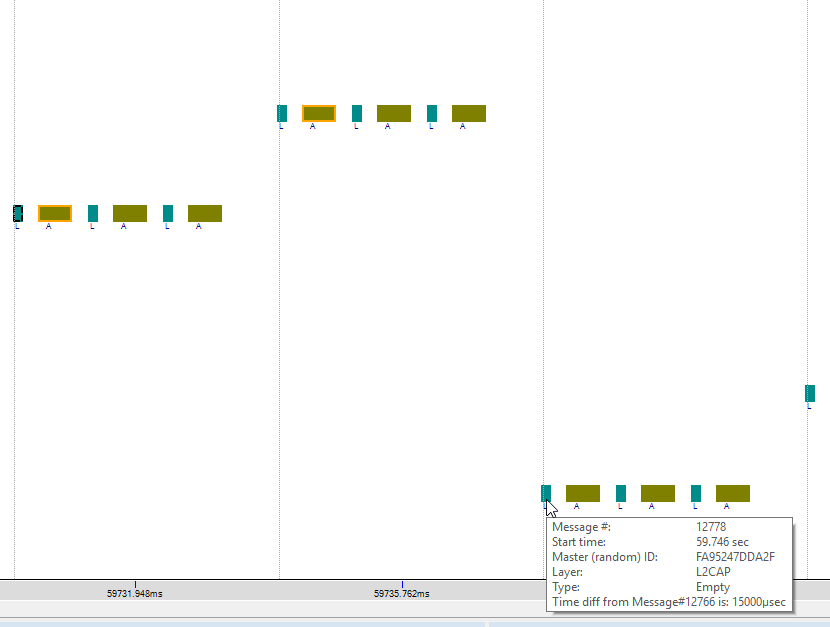

The goal is to sniff the packet flow and verify that connection events are scheduled according to the S130 v2 SoftDevice specification. Based on that specification, I made some calculations. Because both connections use the HIGH BW configuration, tEEO should be 6.9 ms for each peripheral's connection event. So the minimum time between an event of one peripheral and the following event of the other peripheral should be 6.9 ms. This gives 6.9 + 6.9 = 13.8 ms as the lower bound for the connection interval. Since the connection interval must be a multiple of 1.25 ms, the smallest valid interval above this bound is 15 ms (12 × 1.25 ms), so I used a 15 ms connection interval. After both connections are established and both peripherals are sending notifications, the sniffer output looks like the two figures above.
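The interval arithmetic above can be written as a small Python sketch. The 6.9 ms tEEO is the value taken from the S130 v2 specification as described above; rounding up to whole 1.25 ms units and the 7.5 ms floor are the Bluetooth LE connection interval rules. The function name `min_connection_interval` is illustrative only.

```python
import math

# Bluetooth LE connection intervals are multiples of 1.25 ms, from 7.5 ms to 4 s.
INTERVAL_UNIT_MS = 1.25
MIN_INTERVAL_UNITS = 6  # 6 * 1.25 ms = 7.5 ms


def min_connection_interval(t_event_ms, n_links):
    """Smallest valid connection interval that fits one event of
    t_event_ms for each of n_links connections back to back.
    Returns (interval in ms, interval in 1.25 ms units)."""
    budget_ms = t_event_ms * n_links
    units = max(math.ceil(budget_ms / INTERVAL_UNIT_MS), MIN_INTERVAL_UNITS)
    return units * INTERVAL_UNIT_MS, units


# tEEO = 6.9 ms per HIGH BW link (value from the S130 v2 spec), 2 peripherals
print(min_connection_interval(6.9, 2))  # (15.0, 12)
```

Rounding up, rather than to the nearest unit, keeps the interval at or above the summed tEEO budget: 13.8 ms is 11.04 units, so 12 units (15 ms) is the first interval that fits.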

My question is: how can the time between the C0 and C1 events be 3.792 ms, which is far less than the theoretical minimum of 6.9 ms? The second figure is included for completeness; it shows that the next C0 event is indeed 15 ms later.

Thanks for your help in advance.

Regards,

Mathias