Hi,

I am developing both Central and Peripheral devices with nRF52840. For consistency, both are using SDK 15.3.0 and S140 V7.

I am able to connect to peripherals, negotiate an ATT MTU size of 247 bytes, and negotiate the 2 Mbps PHY. Logging on both the peripheral and central devices confirms this is working.

My challenge is getting notification data from my peripherals to my central. If I set up, for example, 2 peripherals to each notify my central every 500 ms with approximately 160 bytes of data, all of the data arrives in the central's BLE event handler (BLE_GATTC_EVT_HVX) just fine. If I double the data rate on the peripherals (i.e. 160 bytes every 250 ms from each of the 2 devices), I get BLE_GATTC_EVT_HVX events for incoming data from the first peripheral only.

I believe that the connection intervals are not being set up correctly after the peripherals connect.

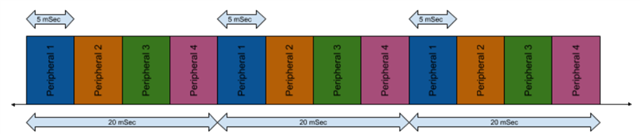

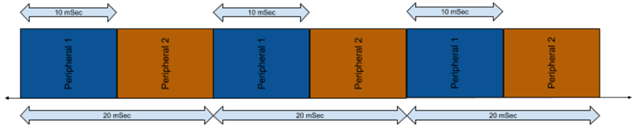

For a scenario where a central is talking to 10 peripherals and receiving notification data from each one every 250 ms, what would good connection interval and slave latency values be? I cannot seem to find a good reference for setting up connection intervals for multiple simultaneous peripheral connections to a central device, where each peripheral is notifying the central independently.

Note that my connection event length is 6 x 1.25 ms = 7.5 ms. This should be more than long enough to transfer one full 247-byte ATT MTU packet.

I have been using the default minimum and maximum connection intervals of 7.5 ms and 30 ms respectively, and a slave latency of 0 (on both the central and peripheral devices).

Suggestions for my scenario would be much appreciated.

Thanks in advance,

Mark J