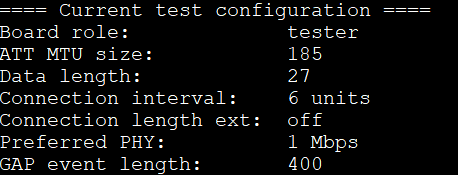

Let me first show the parameters I am choosing for calculating the data rates:

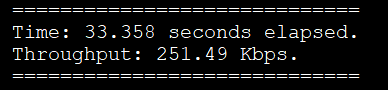

The connection interval is 7.5 ms and connection length ext is off, meaning it won't extend the connection interval beyond 7.5 ms. After I 'run' the test I get

However, if you theoretically calculate the throughput of continuously streaming data with a 7.5 ms connection interval, 1M PHY, 27-byte data length (meaning a 41-byte LL payload) and an ATT MTU of 185 bytes, you should get ~194 kbps. Is there some overhead that the firmware is not accounting for, such as the cumulative time taken to print all of that output to the terminal? Even so, it shouldn't be around 60 kbps. Can you please explain what's wrong here?

PS: I can provide the calculation if required.
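
For reference, here is a rough sketch of one way to arrive at a figure near 194 kbps. It assumes exactly one full notification is delivered per connection interval, and that the 3-byte ATT header and 4-byte L2CAP header are the only per-notification overheads. The function names are made up for illustration, and this may not be the exact calculation the test firmware uses.

```python
# Assumption: one full ATT notification is sent per connection interval.
ATT_HEADER_BYTES = 3    # ATT opcode (1 byte) + attribute handle (2 bytes)
L2CAP_HEADER_BYTES = 4  # L2CAP length (2 bytes) + channel ID (2 bytes)


def att_throughput_kbps(conn_interval_ms, att_mtu, notifications_per_interval=1):
    """Application throughput in kbps (bits per millisecond)."""
    payload_bytes = att_mtu - ATT_HEADER_BYTES
    bits_per_interval = payload_bytes * 8 * notifications_per_interval
    return bits_per_interval / conn_interval_ms


def ll_packets_per_notification(att_mtu, ll_payload_bytes):
    """Number of LL data packets needed to carry one full ATT PDU."""
    total_bytes = att_mtu + L2CAP_HEADER_BYTES
    return -(-total_bytes // ll_payload_bytes)  # integer ceiling division


print(round(att_throughput_kbps(7.5, 185), 1))  # 194.1
print(ll_packets_per_notification(185, 27))     # 7
```

With these numbers, one 185-byte ATT PDU needs seven 27-byte LL packets, so the estimate only holds if at least seven packets actually fit into each 7.5 ms connection event.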