Hello,

whilst implementing my embedded device software, I'm developing a tool running on a Windows laptop to validate my work.

The idea is to use pc-ble-driver-py python bindings to implement simple interactions with the device over BLE using the services exposed.

The current setup is:

- pc-ble-driver-py 0.15.0 (latest)

- nRF52840 Dongle (PCA10059) running connectivity FW 4.1.2 SD API v5 (latest supported)

Most of the functionalities seem to be working fine.

But when I try to collect notifications from a certain rate on, looks like the system is not able to "catch-up".

To give some context - from the embedded device, I'm polling data and queuing notifications on certain char every 10ms. The connection interval is set 15ms.

The moment I enable the notifications on this char from Client side(after the wanted connection intervals are updated), I start receiving as a return value from sd_ble_gatts_hvx the error code NRF_ERROR_RESOURCES on the server side - it's not always the case, but very often, at least 10/20 times per second.

The only explanation I can give to this is that the Central (i.e. the python script) is not meeting all the connection events at fixed interval (15ms), and this way the notification queue on the Server is increasing till its limit.

I tested this scenario with different platforms (Windows UWP, Android) and this is the only case I'm seeing this behaviour.

What I find rather odd is that using exactly the same dongle (with same FW), but from the nRF Connect Desktop App GUI this is NOT happening - I can subscribe to the notifications and getting them at the rate I expect.

Adding a couple of graph to make things clearer:

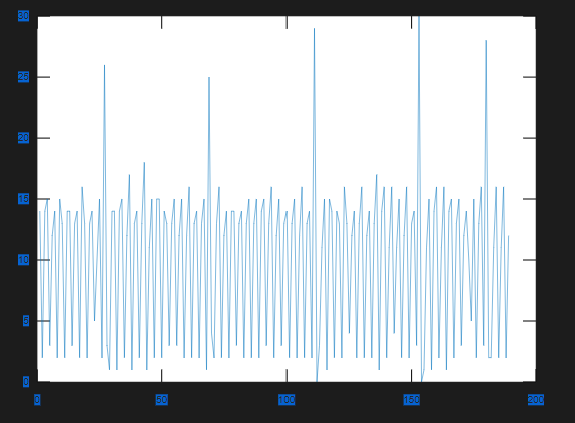

Notifications Intervals (ms) from nRF Connect Desktop:

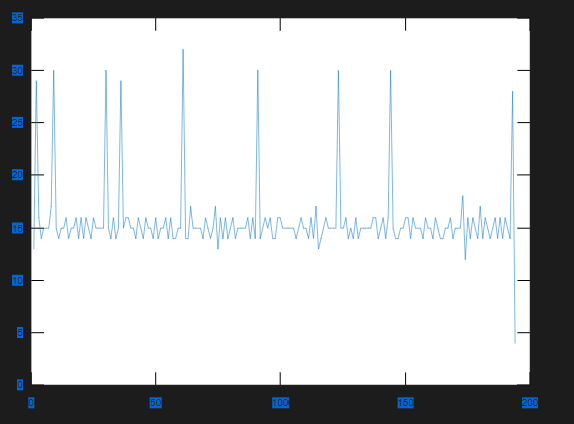

Notifications Intervals (ms) from pc-ble-driver-py:

It's clear that in the first case, two notifications are sometimes very close as the queuing rate is higher than the connection interval (i.e you get more than one notification for conn interval).

Instead in the second case, interval is never below 15ms, meaning that for sure notifications are lost (I imagine due to missed connection events...).

I tried to do the same but changing the dongle with a nRF52-DK. The result is that in this case the system crashes and disconnects after a couple of seconds from the enabling of notifications with this log:

As a quick workaround, If I try to queue notifications every 20ms (or anything above the connection interval), I don't see any problem and the system works flawlessly.

Can you help me understanding what's going on?

I'm evaluating this python libraries for the system testing framework of our devices - and of course we'll need to evaluate also the high-rate notifications.

I think reproducing the issue would be quite trivial, but maybe there is something I'm missing that can solve this straightaway.

Thanks!