I'm using the UDP sample from nRF Connect SDK v1.8.0 with the following modifications:

- PSM disabled
- eDRX disabled
- Sends 100 bytes every 15 seconds

This is running on the nRF9160 DK.

I suspect I can reduce power consumption by disabling RRC idle mode, but I cannot find any documentation on how to do this.

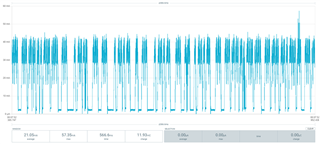

I am unsure of the actual cDRX timing parameters as what seems like DRX events are sporadic.

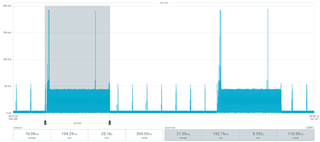

Here are some power profile images and a modem trace if that's helpful.

The above image shows large period of almost continuous activity after the TX event for ~5 seconds and then iDRX activity until the next TX event.

This is the activity while RRC connected.

I am seeing an average current of 10 mA, with 22 mA during the connected period. I expected the average current consumption to be around 2.5 to 4 mA.