Hi,

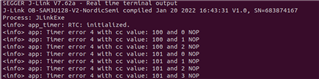

I've encountered a problem with app timer functions being called from different interrupt levels. It looks like the "no memory" error that is returned is caused by a collision in the underlying atomic FIFO library. This seems like unexpected behaviour, as the documentation says nothing about it.

Setup:

nRF5_SDK_17.1.0

gcc-arm-none-eabi-10-2020-q4-major (tested on both Windows and macOS)

gcc-arm-none-eabi-9-2019-q4-major (tested on macOS)

nRF52840_XXAA

App timer v2

No RTOS

Tomasz