Using the HID sample from the Zephyr Project, I modified the report descriptor to create a joystick. Everything works except one thing: Windows only recognizes the axes over a range of -127 to 127, even though the actual values go higher.

I thought that maybe this was just a superficial issue with how Windows displays axes wider than 8 bits, but when I tested a flight joystick and an Xbox controller, both displayed full 16-bit ranges without any problem.

Here is my code:

    static struct report {
        uint8_t id;
        uint16_t RxValue;
        uint16_t RyValue;
        uint16_t RzValue;
    } __packed report_1 = {
        .id = REPORT_ID_1,
        .RxValue = 1,
        .RyValue = 0,
        .RzValue = 0,
    };

    static const uint8_t hid_report_desc[] = {
        HID_USAGE_PAGE(HID_USAGE_GEN_DESKTOP),
        HID_USAGE(HID_USAGE_GEN_DESKTOP_JOYSTICK),
        HID_COLLECTION(HID_COLLECTION_APPLICATION),
        HID_REPORT_ID(REPORT_ID_1),
        // Rotational Axes
        HID_USAGE_PAGE(0x01),           // Generic Desktop
        HID_USAGE(0x33),                // Rx
        HID_USAGE(0x34),                // Ry
        HID_USAGE(0x35),                // Rz
        HID_LOGICAL_MIN8(0x00),
        HID_LOGICAL_MAX16(0xFF, 0xFF),
        HID_REPORT_SIZE(16),
        HID_REPORT_COUNT(3),
        HID_INPUT(0x02),                // Data, Variable, Absolute
        HID_END_COLLECTION,
    };
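One thing that may be worth checking in the descriptor above: as far as I understand the HID specification, the data bytes of a Logical Minimum or Logical Maximum item are read as a signed two's-complement value whose width is the item's own size. Under that reading, the two bytes `0xFF, 0xFF` decode to -1 rather than 65535, which would put the maximum below the minimum of 0. The sketch below only illustrates that decoding; `hid_item_signed` is a hypothetical helper written for this post, not a Zephyr or Windows API, and it assumes the item payload is stored low byte first.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/*
 * Hypothetical helper: decode the payload of a HID short item
 * (1, 2 or 4 data bytes, little-endian) as a signed value, which is
 * how Logical Minimum/Maximum data is assumed to be interpreted here.
 */
int32_t hid_item_signed(const uint8_t *data, size_t size)
{
    assert(size == 1 || size == 2 || size == 4);

    if (size == 1) {
        /* Reinterpret the single byte as signed 8-bit. */
        return (int8_t)data[0];
    }
    if (size == 2) {
        /* Assemble 16 bits, then reinterpret as signed 16-bit. */
        uint16_t raw = (uint16_t)(data[0] | ((uint16_t)data[1] << 8));
        return (int16_t)raw;
    }

    /* Assemble 32 bits, then reinterpret as signed 32-bit. */
    uint32_t raw = (uint32_t)data[0] |
                   ((uint32_t)data[1] << 8) |
                   ((uint32_t)data[2] << 16) |
                   ((uint32_t)data[3] << 24);
    return (int32_t)raw;
}
```

If this is what is happening, two candidate changes to try are a 4-byte Logical Maximum carrying `0xFF, 0xFF, 0x00, 0x00` (if your Zephyr version provides a 32-bit variant of the macro), or a signed range of -32768 to 32767 with the axes sent as `int16_t`. I have not verified either against this exact setup.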

I have also tried every combination of signed and unsigned integer types.

In `main`, I increment the axis values every cycle (50 ms). When I view the raw values, they are in fact 16-bit: when they reach 255 they do not wrap back to 0, but continue all the way to 65,535 and only then wrap.
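For reference, the increment and the resulting wire format can be sketched in plain C as below. This is a minimal standalone illustration, not the actual Zephyr sample code: `next_axis_value`, `pack_report` and `REPORT_LEN` are hypothetical names, and the byte layout assumes the packed struct is sent as is on a little-endian target (report ID first, then each axis low byte first).

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define REPORT_LEN 7u /* 1 byte report ID + three 16-bit axes */

/* Advance an axis by one step; the uint16_t result wraps 65535 -> 0. */
uint16_t next_axis_value(uint16_t v)
{
    return (uint16_t)(v + 1u);
}

/* Write the report ID and the three axes into buf, low byte first. */
void pack_report(uint8_t *buf, uint8_t id,
                 uint16_t rx, uint16_t ry, uint16_t rz)
{
    const uint16_t axes[3] = { rx, ry, rz };

    assert(buf != NULL);

    buf[0] = id;
    for (size_t i = 0; i < 3; i++) {
        buf[1 + 2 * i] = (uint8_t)(axes[i] & 0xFFu); /* low byte */
        buf[2 + 2 * i] = (uint8_t)(axes[i] >> 8);    /* high byte */
    }
}
```

With this layout, a value of 256 appears on the wire as `0x00, 0x01`, which matches the raw values continuing past 255 instead of wrapping.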

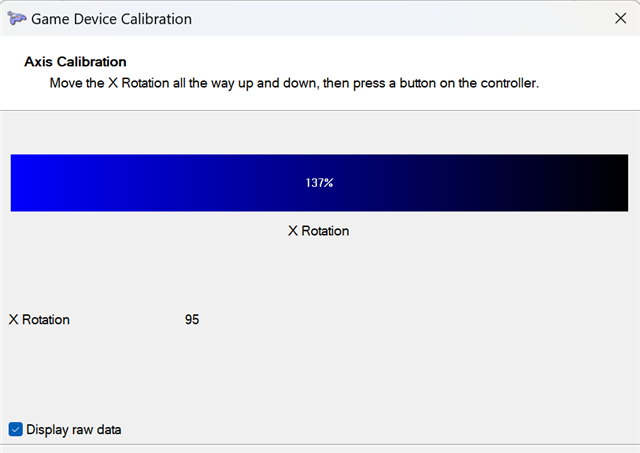

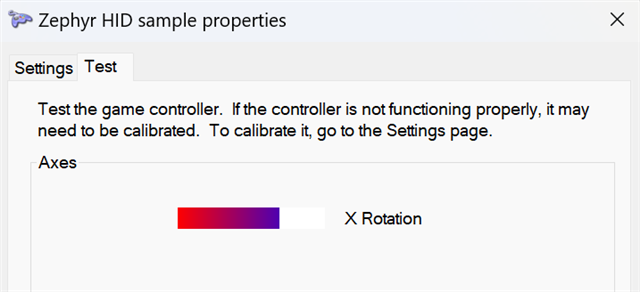

In the first picture, you can see that it treats the value 95 as 137% of the maximum, yet the second picture, taken at around the same time, clearly shows that 95 is well within the maximum value of 127. The axis range is clearly confused.

So how can I have 16-bit axis ranges?