Hello,

We are using an nRF5340 at 3.3V to drive an RGB LED (common anode) in our system. Our PCB has very little space, so we cannot use external MOSFETs (we have to use the GPIO pins of the nRF5340 as low-side switches for the LED). In addition, we have three TWI communication interfaces (two over standard pins, one over the dedicated TWI pins) with a 4.7k external pull-up on each signal line, which would result in a little more than 4 mA in the worst case, if all lines were pulled down by the TWI interfaces at the same time.
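As a rough sanity check of that TWI figure, here is a minimal sketch of the arithmetic. It assumes two signal lines per interface (SDA and SCL) and that a low line sits exactly at VSS, which slightly overestimates the current; the function name is only illustrative.

```python
# Worst-case TWI pull-up current, assuming every line is held low at once.
VDD = 3.3            # supply voltage in volts (from the post)
R_PULLUP = 4700.0    # external pull-up per signal line in ohms (from the post)
N_INTERFACES = 3     # three TWI interfaces (from the post)
LINES_PER_BUS = 2    # assumption: SDA and SCL per interface


def twi_pullup_current_ma(vdd=VDD, r_pullup=R_PULLUP,
                          n_interfaces=N_INTERFACES,
                          lines_per_bus=LINES_PER_BUS):
    """Total sink current in mA, assuming each low line is pulled fully to VSS."""
    per_line_a = vdd / r_pullup
    return per_line_a * n_interfaces * lines_per_bus * 1000.0


print(f"{twi_pullup_current_ma():.2f} mA")  # prints "4.21 mA"
```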

Recommended operating current for the LED is 10 mA for red and 5 mA for green and blue.

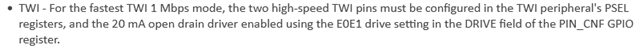

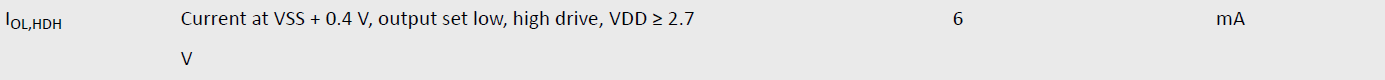

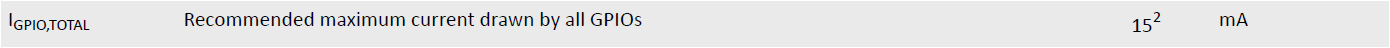

The product specification says:

If I drove all LED channels at maximum brightness, I would sink 20 to 25 mA (including the TWI lines) into the nRF5340. Could this already impede stable operation of the nRF5340? Would it damage the SoC? I'm not sure what happens if we exceed the "recommended maximum current". Is it less critical if the current is sunk into the nRF5340 instead of drawn from it?

If exceeding the specified value is a problem, may the 15 mA be interpreted as an average current, or does it also apply to peak current? (E.g. if I drive the LED in white with PWM where all three channels are synchronous, I sink 10 mA (plus TWI) on average, but 20 mA (plus TWI) peak at 50% duty cycle.)
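To make the peak versus average numbers concrete, here is a small sketch of the calculation. It assumes all three PWM channels switch in phase and treats the TWI pull-up current as a constant worst-case 4.2 mA, which is pessimistic because the bus lines are not low all the time. The helper name is hypothetical.

```python
def pwm_sink_currents_ma(channel_currents_ma, duty, static_ma=0.0):
    """Return (peak, average) sink current in mA for in-phase PWM channels.

    channel_currents_ma: on-state current of each LED channel
    duty:                shared duty cycle, 0.0 to 1.0
    static_ma:           current that flows regardless of PWM (here: TWI pull-ups)
    """
    led_total = sum(channel_currents_ma)
    peak = led_total + static_ma            # all channels on at the same instant
    average = led_total * duty + static_ma  # averaged over one PWM period
    return peak, average


# Red 10 mA, green 5 mA, blue 5 mA at 50% duty, plus worst-case TWI current.
peak, average = pwm_sink_currents_ma([10.0, 5.0, 5.0], duty=0.5, static_ma=4.2)
print(f"peak {peak:.1f} mA, average {average:.1f} mA")
```

Staggering the three channels in time would lower the peak without changing the average, but whether that matters depends on how the 15 mA limit is meant to be read.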

One more question: the product specification says the low-level output voltage is between VSS and VSS+0.4V. If my green LED has a forward voltage of 2.7V, I have 0.6V left to "burn" outside of the LED. What voltage should I use to calculate the resistor for the LED? Using VSS would result in burning 0.6V at 5 mA in the resistor, but using VSS+0.4V would result in burning only 0.2V at 5 mA, which would be a third of the previous resistor value...
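The two resistor values, and what happens if the wrong assumption is picked, can be sketched as follows. This assumes the LED forward voltage stays fixed at 2.7V regardless of current, which is only an approximation, and the function names are just for illustration.

```python
VDD = 3.3         # supply voltage in volts
V_F_GREEN = 2.7   # green LED forward voltage in volts (assumed constant)
I_TARGET = 0.005  # target LED current in amperes (5 mA)


def led_resistor_ohms(vdd, v_forward, v_ol, i_led_a):
    """Series resistor for a given GPIO low-level output voltage v_ol."""
    return (vdd - v_forward - v_ol) / i_led_a


def led_current_ma(vdd, v_forward, v_ol, r_ohms):
    """LED current in mA for a given resistor and actual GPIO low level."""
    return (vdd - v_forward - v_ol) / r_ohms * 1000.0


r_at_vss = led_resistor_ohms(VDD, V_F_GREEN, 0.0, I_TARGET)   # about 120 ohms
r_at_0v4 = led_resistor_ohms(VDD, V_F_GREEN, 0.4, I_TARGET)   # about 40 ohms

# Cross cases: resistor sized for one low level, pin actually at the other.
i_low = led_current_ma(VDD, V_F_GREEN, 0.4, r_at_vss)   # about 1.7 mA
i_high = led_current_ma(VDD, V_F_GREEN, 0.0, r_at_0v4)  # about 15 mA

print(f"R = {r_at_vss:.0f} ohm or {r_at_0v4:.0f} ohm")
print(f"current spread: {i_low:.1f} mA to {i_high:.1f} mA")
```

With so little headroom, the resulting current swings by roughly a factor of nine between the two extremes, which is why the choice of voltage matters so much here.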

Sorry for asking this question again. There were already some questions regarding GPIO current, but I couldn't find the details I'm looking for in them.

Best regards,

Michael