Hi All,

I have an application where I want to send small amounts of data (~25 byte payload) very rapidly, about 1000 times per second. Because I am using the data as close to real time as possible, I do not want to buffer samples and send them out in groups. I want to read a sample and then immediately send it out.

Because of this, I am not that concerned about achieving maximum throughput efficiency. I care more about how many distinct, unbuffered samples I can get out per second.

I was looking through the Softdevice documentation for the 52840 and focused on the "BLE Data throughput" page. https://infocenter.nordicsemi.com/index.jsp?topic=%2Fstruct_nrf52%2Fstruct%2Fs140.html

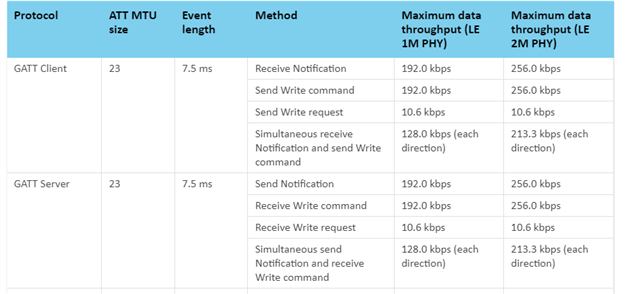

The top table seems to show that a GATT server or client with one connection, performing only one read or write operation, can achieve a maximum data throughput of 256,000 bits per second. This is similar to what my application would be: one connection between a central and a peripheral, with the peripheral streaming small packets to the central. My payload would be pretty close to the 23 bytes shown in the table.

Using the equation above the chart:

Throughput_bps = num_packets * (ATT_MTU - 3) * 8 / seconds

I calculate the theoretical max of "packets per second" as 256,000 / (20*8) = 1600 packets per second.
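
To double-check my arithmetic, here is a minimal Python sketch of the same calculation. The function names are just my own for illustration (they are not part of any SoftDevice API), and it assumes the default ATT_MTU of 23, so 20 bytes of payload per packet:

```python
def packets_per_second(throughput_bps: float, att_mtu: int = 23) -> float:
    """Rearranged form of the formula: packets/s for a given throughput."""
    payload_bits = (att_mtu - 3) * 8  # 3 bytes of each ATT PDU are header
    return throughput_bps / payload_bits


def throughput_from_packets(num_packets: int, att_mtu: int = 23,
                            seconds: float = 1.0) -> float:
    """Throughput_bps = num_packets * (ATT_MTU - 3) * 8 / seconds."""
    return num_packets * (att_mtu - 3) * 8 / seconds


if __name__ == "__main__":
    table_max_bps = 256_000  # figure I read from the top table
    target_rate = 1000       # samples per second I want to send

    print("max packets/s:", packets_per_second(table_max_bps))
    print("bps needed at target rate:", throughput_from_packets(target_rate))
```

If I have this right, 1000 packets per second at 20 bytes each works out to 160,000 bits per second, which is below the 256,000 figure from the table.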

I realize these are theoretical maximums and not what I will see in real-world applications, but I wanted to verify that I am interpreting this correctly when calculating the theoretical number of packets per second I could send. Do these SoftDevice numbers take the hardware into account, such as the time needed to switch the radio on and start transmitting? Or are they truly theoretical maximums that assume the hardware and software are in a perfect environment? Once again, I am more concerned with how many individual packets I can get across per second than with the data throughput.

Thank you for any help!