Hello!

I am currently running into throughput problems while using the Nordic UART Service (NUS).

I am building a project for a peripheral and wanted to test the achievable throughput using the central_uart example. I modified this example slightly to request updates of the BLE connection parameters and the data length. I have callbacks for these updates in both my peripheral project and the central project, and I also added the needed CONFIG_XXX options to the respective prj.conf files. After connecting, both devices show the following log, so I assume this has worked correctly:

```
<inf> BLE: MTU exchange successful
<inf> BLE: New MTU: 244 bytes
<inf> BLE: Data length updated. Length 251/251 bytes, time 2120/2120 us
<inf> BLE: Connection parameters updated. interval 320, latency 0, timeout 400
```

I chose these parameters after trying the BLE Throughput example. With that example, the configuration listed above and the 1M PHY, I get a data rate of 783 kbps, which is great.

However, when I send 244-byte messages with my central/peripheral setup, I run into the following problem:

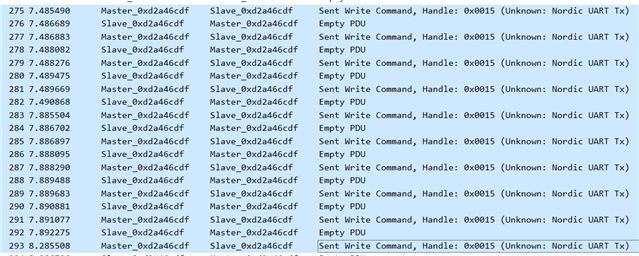

On the client side, I call bt_nus_client_send(), which in turn calls bt_gatt_write() and waits for the passed callback function. There is a large delay of ~800 ms between the call to the write function and the execution of the callback. This slows the data rate down to an unusable ~330 bytes/s.

On the peripheral side, I only have my bt_nus_cb receive callback, which just copies the received data. In my view, this should not be a performance-limiting factor.

I know that the Throughput example uses bt_gatt_write_without_response(), while the NUS client uses bt_gatt_write(), which, if I'm correct, waits for an acknowledgement (an ATT Write Response) from the peer before completing. But I don't think that this alone would cause such a huge delay...

So my question is: am I missing something here? Do you have any suggestions on what I need to change to increase the data rate? The Throughput example shows that much higher rates are achievable, so I would expect the same to be possible with NUS as well.

Any help is appreciated!