Hello,

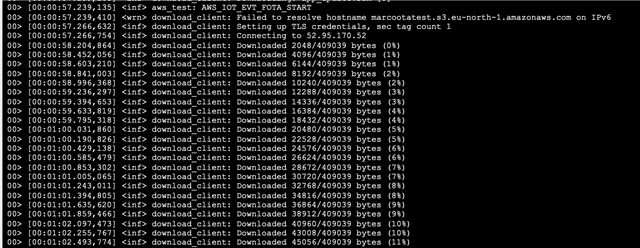

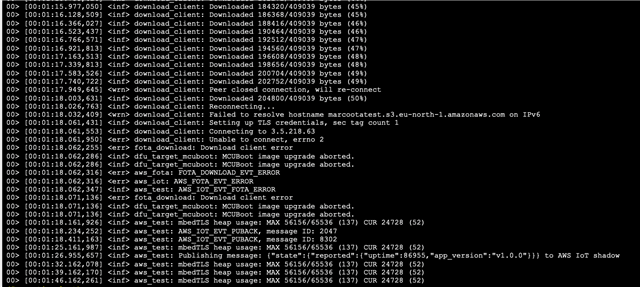

I am using the AWS IoT library and ran into an issue when downloading a new image (FOTA) from AWS. The FOTA process starts and the image downloads up to about 50%, at which point the peer apparently closes the connection (received length is 0) and the download client can't reconnect to the server. The overall FOTA process then fails.

My board is the nRF52840 DK with the W5500 Ethernet Arduino shield on top. MQTT publish and subscribe work fine (also with the device shadow). I am using nRF SDK 2.6.0.

I have attached my prj.conf file here: 1072.prj.conf

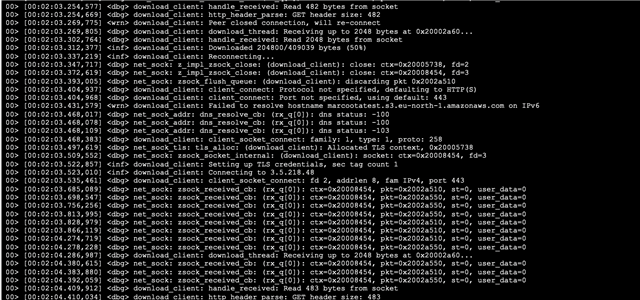

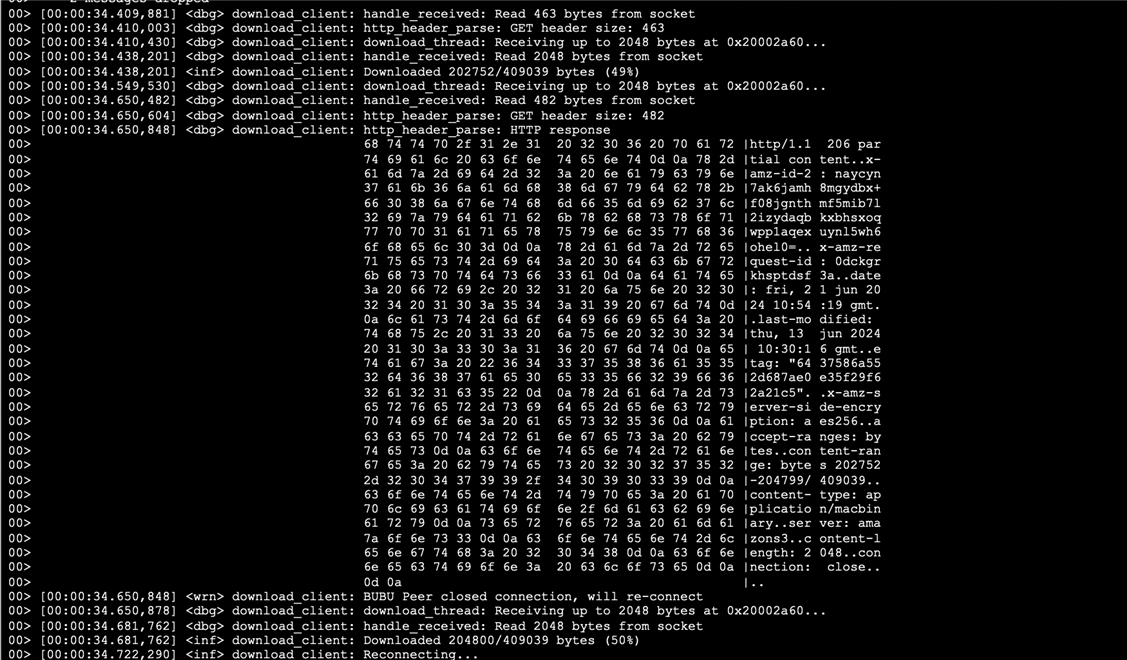

Here is the error:

More details: as you can see from the prj.conf file, I am using the Zephyr built-in mbedTLS, and all communication uses TLS (MQTTS and HTTPS).

As you can see from the log, at around 50% the download client prints "Peer closed connection, will reconnect" and then fails to reconnect, returning errno 2 (No such file or directory).
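For reference, errno 2 is `ENOENT` on POSIX-style systems, which matches the "No such file or directory" text in the log. Below is a minimal sketch of how an errno value can be turned into text; `errno_text` is a hypothetical helper name of mine, not something from the download client, and it assumes the code may be handed either the positive or the negated value.

```c
#include <errno.h>
#include <string.h>

/* Hypothetical helper (not part of any library): return the text for an
 * errno value. Accepts either sign, since some APIs return -errno. */
static const char *errno_text(int err)
{
    return strerror(err < 0 ? -err : err);
}
```

Calling `errno_text(-ENOENT)` or `errno_text(2)` should give the same string that the log prints.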

Looking at the download client source code, the "Peer closed connection" message is printed when the received length is 0.
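To make my reading of that check concrete, here is a minimal sketch of how the return value of a BSD-style `recv()` is usually interpreted. This is not the download client's actual code; `classify_recv` and the enum names are hypothetical and only illustrate the convention that a return value of 0 means the peer closed the connection in an orderly way.

```c
#include <sys/types.h>

/* Hypothetical names, for illustration only. */
enum recv_outcome {
    RECV_DATA,        /* len > 0: payload bytes were received */
    RECV_PEER_CLOSED, /* len == 0: the peer shut the connection down */
    RECV_ERROR        /* len < 0: the call failed, errno holds the reason */
};

/* Classify the value returned by recv() on a stream socket. */
static enum recv_outcome classify_recv(ssize_t len)
{
    if (len > 0) {
        return RECV_DATA;
    }
    if (len == 0) {
        return RECV_PEER_CLOSED;
    }
    return RECV_ERROR;
}
```

In my case the log suggests the download ends up in the `len == 0` branch at around 50%, after which the reconnect attempt fails.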

It always fails in the same way, and I have no idea why.

Please help.

Thank you.

Marco