I am working on a project where we send data to a remote device as fast as possible. The sender calls bt_gatt_write() until it blocks the thread, so the queues are always kept full of packets. Generally, the BLE stack crams as many packets into each connection event as possible; however, sometimes, especially with multiple connections, it chooses to send only a couple. This drastically reduces throughput.

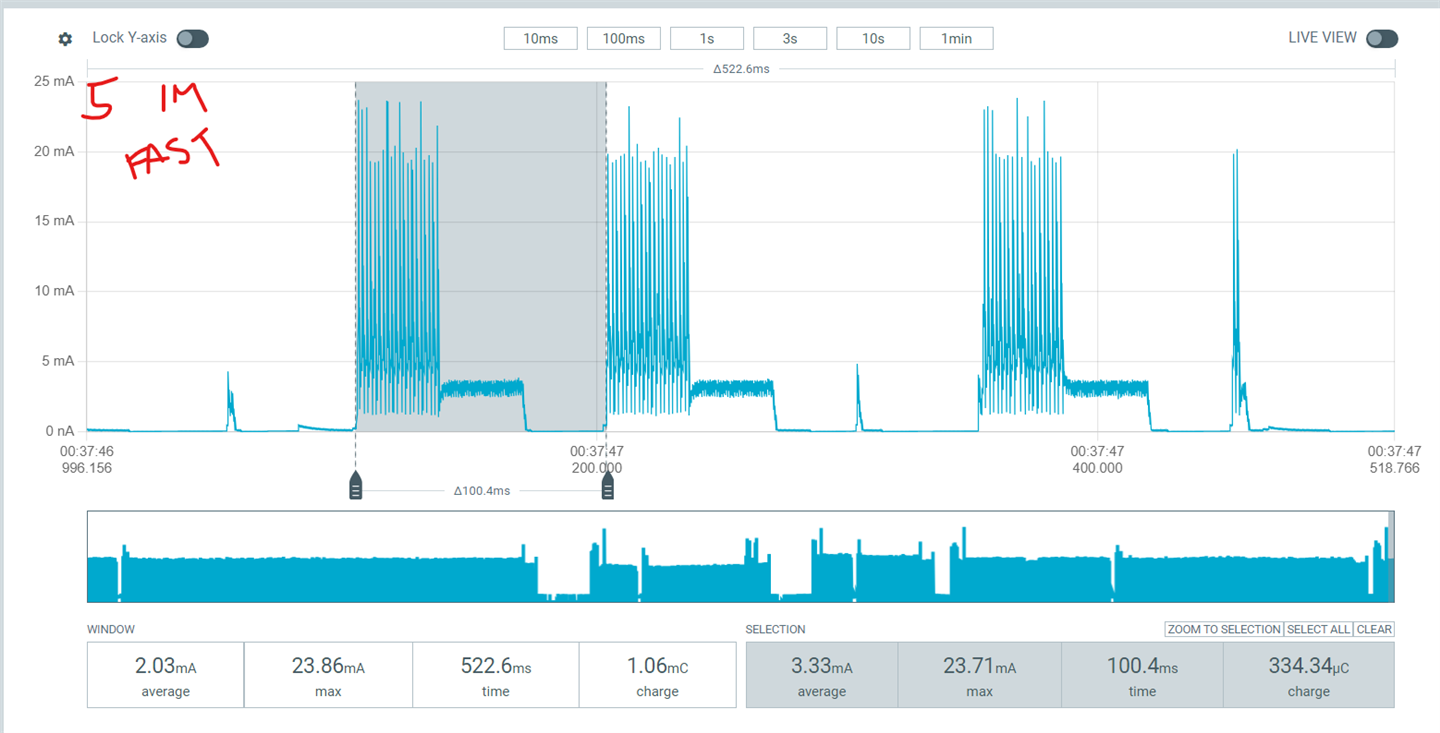

In one instance, when we had 5 connections active, the time to send ~330kB varied from 45 seconds to almost 5 minutes. I captured these images from a power profiler connected to the remote device receiving the data:

The remote peripherals are nRF52840s and have the following parameters:

CONFIG_BT_PERIPHERAL_PREF_LATENCY=0

CONFIG_BT_PERIPHERAL_PREF_MAX_INT=40 //50ms

CONFIG_BT_PERIPHERAL_PREF_MIN_INT=24 //30ms

CONFIG_BT_PERIPHERAL_PREF_TIMEOUT=600

CONFIG_BT_L2CAP_TX_MTU=77

CONFIG_BT_BUF_ACL_TX_SIZE=81

CONFIG_BT_BUF_ACL_RX_SIZE=81

CONFIG_BT_CTLR_DATA_LENGTH_MAX=81
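
The interval and timeout values above are expressed in Bluetooth units rather than milliseconds: connection intervals count in steps of 1.25 ms and the supervision timeout in steps of 10 ms. A small sketch of the conversion follows; the helper names are hypothetical and are not part of the Zephyr API.

```c
#include <stdint.h>

/* Hypothetical helper: connection interval values are in units of 1.25 ms,
 * so the result is returned in microseconds to stay in integer math. */
static inline uint32_t conn_interval_to_us(uint16_t units)
{
    return (uint32_t)units * 1250u;
}

/* Hypothetical helper: supervision timeout values are in units of 10 ms. */
static inline uint32_t supervision_timeout_to_ms(uint16_t units)
{
    return (uint32_t)units * 10u;
}
```

With the values listed above, the preferred interval range works out to 30 ms to 50 ms and the supervision timeout to 6 s.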

The sending device is an nRF5340 acting as a central and has the following parameter:

CONFIG_BT_CTLR_SDC_MAX_CONN_EVENT_LEN_DEFAULT=4000

Traffic on the other remote devices is relatively light, consisting mainly of a heartbeat sent every 500 ms.

Can you shed any light on why the scheduler would limit the number of packets per connection event, even though it can send 5x as much with the same devices connected?
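
To put rough numbers on the gap between the best and worst runs, the sketch below computes the average throughput and the average number of bytes delivered per connection interval. It assumes that ~330 kB means 330,000 bytes, that "almost 5 minutes" is about 300 s, and that the negotiated connection interval is 50 ms (the upper preferred value; the actual interval was not measured here). The function names are hypothetical.

```c
#include <stdint.h>

/* Average application throughput in bytes per second (integer division). */
static inline uint32_t throughput_bytes_per_s(uint32_t total_bytes,
                                              uint32_t seconds)
{
    return total_bytes / seconds;
}

/* Average bytes delivered per connection interval.
 * interval_us is the connection interval in microseconds (assumed value). */
static inline uint32_t bytes_per_interval(uint32_t bytes_per_s,
                                          uint32_t interval_us)
{
    return (uint32_t)(((uint64_t)bytes_per_s * interval_us) / 1000000u);
}
```

Under these assumptions the fast run averages about 7333 bytes/s (roughly 366 bytes per 50 ms interval), while the slow run averages about 1100 bytes/s (roughly 55 bytes per interval, less than one full packet).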

Can you explain how CONFIG_BT_CTLR_SDC_MAX_CONN_EVENT_LEN_DEFAULT works? We thought that setting it to 4000 would limit the time in which packets can be sent to 4 ms per connection event; however, that does not seem to be the case, since in some scenarios the packets are seemingly filling the connection event.
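
As a baseline to compare against the profiler trace, the sketch below estimates how many data packets would fit in a given event length. It is only a back-of-the-envelope model and makes several assumptions not stated above: the link uses the LE 1M PHY, it is unencrypted, every data packet carries the full 81-byte payload, and the peripheral answers each one with an empty packet. It does not model how the SoftDevice Controller actually schedules events, and the function names are hypothetical.

```c
#include <stdint.h>

/* Inter-frame space between packets, fixed by the Bluetooth Core Specification. */
#define T_IFS_US 150u

/* On-air time of one unencrypted LE 1M PHY data packet:
 * 1 preamble + 4 access address + 2 header + payload + 3 CRC bytes,
 * at 8 us per byte (1 Mbit/s). */
static inline uint32_t le_1m_packet_time_us(uint32_t payload_bytes)
{
    return (payload_bytes + 10u) * 8u;
}

/* Rough number of "data packet + empty reply" exchanges that fit in
 * event_len_us, counting one T_IFS gap after each packet. */
static inline uint32_t exchanges_per_event(uint32_t event_len_us,
                                           uint32_t payload_bytes)
{
    uint32_t exchange_us = le_1m_packet_time_us(payload_bytes) + T_IFS_US
                         + le_1m_packet_time_us(0u) + T_IFS_US;

    return event_len_us / exchange_us;
}
```

Under these assumptions one 81-byte packet takes 728 µs on air, a full exchange takes about 1108 µs, and a 4000 µs budget would hold about 3 exchanges. Seeing noticeably more packets than that in a single event would suggest the 4 ms limit is not being applied the way we expected.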

Any tips for optimizing for our setup would be appreciated.