Hi there,

I recently moved to the nRF5340 for my audio recording application. I have successfully implemented an I2S master on the nRF5340, with an SPH0465 MEMS microphone as the slave. As the data comes in, I push it into a ring buffer (using Zephyr's built-in ring buffer library). I then pop the data from the ring buffer and send it asynchronously over UART to the PC. Here are my questions:

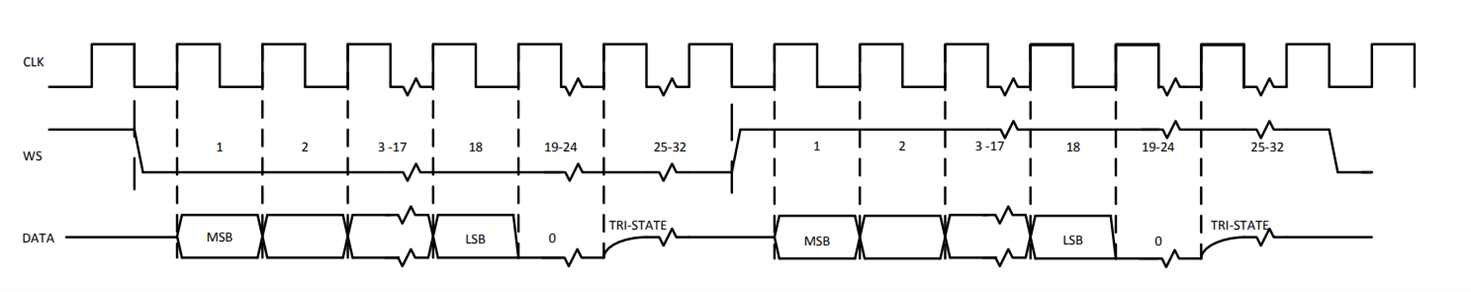

According to the datasheet, the said microphone sends the audio data in packets of four bytes for each channel. Considering a single microphone arrangement, here is the data flow diagram:

That is, only 18 of the 32 bits in each slot carry useful data, and they are sent big-endian (most significant bit first).
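A minimal sketch of pulling the 18-bit sample out of one 32-bit slot, assuming the sample ends up MSB-aligned (left-justified) in a `uint32_t` once it is in RAM, so that bits 31..14 hold the data and bits 13..0 are padding. The helper name is hypothetical, and the alignment assumption should be checked against the datasheet timing diagram and the I2S word size and format configuration.

```c
#include <stdint.h>

/* Hypothetical helper: extract an 18-bit two's-complement sample from a
 * 32-bit I2S slot. ASSUMPTION: the sample is MSB-aligned in the word,
 * i.e. bits 31..14 hold the data and bits 13..0 are padding. */
static int32_t slot_to_sample18(uint32_t slot)
{
    uint32_t raw = slot >> 14; /* 18 data bits, now in bits 17..0 */

    /* Portable sign extension: flip the sign bit (bit 17), then subtract
     * its weight. Result range is -131072..131071. */
    return (int32_t)(raw ^ 0x20000u) - 0x20000;
}
```

The XOR-and-subtract form avoids right-shifting a negative signed value, which is implementation-defined in C.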

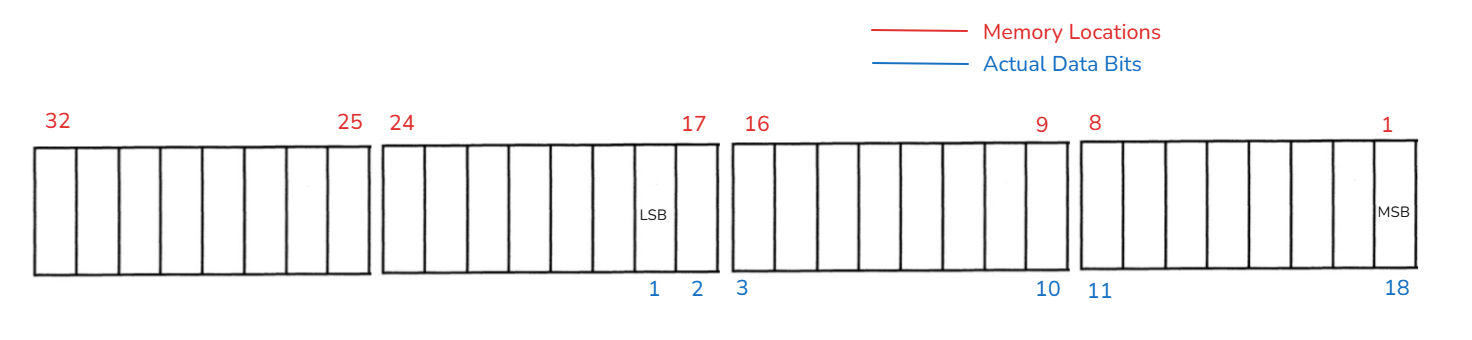

- My understanding is that the data read from microphone on nRF5340 is still stored in big endian format. I am not too sure about it but after observing raw data coming out from UART, I am reasonably sure that for a 32 bit memory location, the incoming data from UART (originally sent from the microphone via I2S) is stored as follows:
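One way to check the byte-order question is to compare a word's in-memory bytes with an explicitly serialized copy. The nRF5340's Arm Cortex-M33 cores are little-endian, so sending a `uint32_t` straight from RAM (for example, pushed into a byte-oriented ring buffer with a plain byte copy) puts its least significant byte on the wire first. The sketch below, with hypothetical helper names, writes a word into a byte buffer in a chosen order, so the UART stream can be made to match whichever byte order is selected in Audacity's raw-data import.

```c
#include <stdint.h>
#include <string.h>

/* Hypothetical helper: write a 32-bit word as 4 bytes, most significant
 * byte first (big-endian), independent of the CPU's own byte order. */
static void put_u32_be(uint8_t out[4], uint32_t w)
{
    out[0] = (uint8_t)(w >> 24);
    out[1] = (uint8_t)(w >> 16);
    out[2] = (uint8_t)(w >> 8);
    out[3] = (uint8_t)w;
}

/* Hypothetical helper: write a 32-bit word as 4 bytes, least significant
 * byte first (little-endian). */
static void put_u32_le(uint8_t out[4], uint32_t w)
{
    out[0] = (uint8_t)w;
    out[1] = (uint8_t)(w >> 8);
    out[2] = (uint8_t)(w >> 16);
    out[3] = (uint8_t)(w >> 24);
}

/* Returns 1 if this CPU stores the least significant byte at the lowest
 * address, i.e. a raw memcpy of a uint32_t sends the LSB first. */
static int host_is_little_endian(void)
{
    uint32_t probe = 1u;
    uint8_t first;
    memcpy(&first, &probe, 1);
    return first == 1;
}
```

On a little-endian target, a raw copy of `0x12345678` comes out as `78 56 34 12`, while `put_u32_be` always produces `12 34 56 78`.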

Here is everything I have tried when reading the data from the UART:

- Left the ring buffer data unchanged and sent all 4 bytes as-is over UART. Loaded the data in Audacity as Raw Data, 32-bit signed PCM, big-endian. Playback is a single tone, not the actual audio.

- Logically shifted the data two bits to the right and sent three bytes over UART. Loaded the data in Audacity as Raw Data, 16-bit signed PCM, big-endian. No sound in playback.

- Logically shifted the data two bits to the right and sent two bytes over UART. Loaded the data in Audacity as Raw Data, 16-bit signed PCM, big-endian. No sound in playback.
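For comparison, a sketch of packing 32-bit slots into the raw formats Audacity imports, assuming the 18 data bits occupy bits 31..14 of each `uint32_t` (MSB-aligned). Under that assumption no shift is needed: the top two bytes form a 16-bit signed sample (dropping the two least significant data bits) and the top three bytes form a 24-bit signed sample (with six zero padding bits), written most significant byte first to match Audacity's big-endian option. The function name is hypothetical.

```c
#include <stddef.h>
#include <stdint.h>

/* Hypothetical helper: pack MSB-aligned 32-bit I2S slots into big-endian
 * signed PCM with bytes_per_sample = 2 (16-bit) or 3 (24-bit).
 * Keeping only the most significant bytes preserves the two's-complement
 * sign, so no shifting is required. Returns the number of bytes written;
 * `out` must hold at least n * bytes_per_sample bytes. */
static size_t slots_to_pcm_be(const uint32_t *slots, size_t n,
                              unsigned bytes_per_sample, uint8_t *out)
{
    size_t k = 0;
    for (size_t i = 0; i < n; i++) {
        for (unsigned b = 0; b < bytes_per_sample; b++) {
            /* b = 0 takes bits 31..24, b = 1 bits 23..16, b = 2 bits 15..8 */
            out[k++] = (uint8_t)(slots[i] >> (24u - 8u * b));
        }
    }
    return k;
}
```

The import settings then need to agree with what was packed: signed 16-bit or 24-bit PCM, big-endian, the number of slots per frame as the channel count, and the sample rate configured for the I2S master.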

The data in my ring buffer looks reasonable. It would not arrive at all if the microphone were not working, and I get no errors from configuring or running the I2S master either. So I am confident the microphone itself is fine.

What else could I be missing? Is my understanding of the data pattern incorrect?

Cheers.