Hello world..

please solve my problem..

I wanna to implement this arduino code..

/******************************************************************

* ArduinoANN - An artificial neural network for the Arduino

* All basic settings can be controlled via the Network Configuration

* section.

* See robotics.hobbizine.com/arduinoann.html for details.

******************************************************************/

#include <math.h>

/******************************************************************

* Network Configuration - customized per network

******************************************************************/

const int PatternCount = 10;

const int InputNodes = 7;

const int HiddenNodes = 8;

const int OutputNodes = 4;

const float LearningRate = 0.3;

const float Momentum = 0.9;

const float InitialWeightMax = 0.5;

const float Success = 0.0004;

const byte Input[PatternCount][InputNodes] = {

{ 1, 1, 1, 1, 1, 1, 0 }, // 0

{ 0, 1, 1, 0, 0, 0, 0 }, // 1

{ 1, 1, 0, 1, 1, 0, 1 }, // 2

{ 1, 1, 1, 1, 0, 0, 1 }, // 3

{ 0, 1, 1, 0, 0, 1, 1 }, // 4

{ 1, 0, 1, 1, 0, 1, 1 }, // 5

{ 0, 0, 1, 1, 1, 1, 1 }, // 6

{ 1, 1, 1, 0, 0, 0, 0 }, // 7

{ 1, 1, 1, 1, 1, 1, 1 }, // 8

{ 1, 1, 1, 0, 0, 1, 1 } // 9

};

const byte Target[PatternCount][OutputNodes] = {

{ 0, 0, 0, 0 },

{ 0, 0, 0, 1 },

{ 0, 0, 1, 0 },

{ 0, 0, 1, 1 },

{ 0, 1, 0, 0 },

{ 0, 1, 0, 1 },

{ 0, 1, 1, 0 },

{ 0, 1, 1, 1 },

{ 1, 0, 0, 0 },

{ 1, 0, 0, 1 }

};

/******************************************************************

* End Network Configuration

******************************************************************/

int i, j, p, q, r;

int ReportEvery1000;

int RandomizedIndex[PatternCount];

long TrainingCycle;

float Rando;

float Error;

float Accum;

float Hidden[HiddenNodes];

float Output[OutputNodes];

float HiddenWeights[InputNodes+1][HiddenNodes];

float OutputWeights[HiddenNodes+1][OutputNodes];

float HiddenDelta[HiddenNodes];

float OutputDelta[OutputNodes];

float ChangeHiddenWeights[InputNodes+1][HiddenNodes];

float ChangeOutputWeights[HiddenNodes+1][OutputNodes];

void setup(){

Serial.begin(9600);

randomSeed(analogRead(3));

ReportEvery1000 = 1;

for( p = 0 ; p < PatternCount ; p++ ) {

RandomizedIndex[p] = p ;

}

}

void loop (){

/******************************************************************

* Initialize HiddenWeights and ChangeHiddenWeights

******************************************************************/

for( i = 0 ; i < HiddenNodes ; i++ ) {

for( j = 0 ; j <= InputNodes ; j++ ) {

ChangeHiddenWeights[j][i] = 0.0 ;

Rando = float(random(100))/100;

HiddenWeights[j][i] = 2.0 * ( Rando - 0.5 ) * InitialWeightMax ;

}

}

/******************************************************************

* Initialize OutputWeights and ChangeOutputWeights

******************************************************************/

for( i = 0 ; i < OutputNodes ; i ++ ) {

for( j = 0 ; j <= HiddenNodes ; j++ ) {

ChangeOutputWeights[j][i] = 0.0 ;

Rando = float(random(100))/100;

OutputWeights[j][i] = 2.0 * ( Rando - 0.5 ) * InitialWeightMax ;

}

}

Serial.println("Initial/Untrained Outputs: ");

toTerminal();

/******************************************************************

* Begin training

******************************************************************/

for( TrainingCycle = 1 ; TrainingCycle < 2147483647 ; TrainingCycle++) {

/******************************************************************

* Randomize order of training patterns

******************************************************************/

for( p = 0 ; p < PatternCount ; p++) {

q = random(PatternCount);

r = RandomizedIndex[p] ;

RandomizedIndex[p] = RandomizedIndex[q] ;

RandomizedIndex[q] = r ;

}

Error = 0.0 ;

/******************************************************************

* Cycle through each training pattern in the randomized order

******************************************************************/

for( q = 0 ; q < PatternCount ; q++ ) {

p = RandomizedIndex[q];

/******************************************************************

* Compute hidden layer activations

******************************************************************/

for( i = 0 ; i < HiddenNodes ; i++ ) {

Accum = HiddenWeights[InputNodes][i] ;

for( j = 0 ; j < InputNodes ; j++ ) {

Accum += Input[p][j] * HiddenWeights[j][i] ;

}

Hidden[i] = 1.0/(1.0 + exp(-Accum)) ;

}

/******************************************************************

* Compute output layer activations and calculate errors

******************************************************************/

for( i = 0 ; i < OutputNodes ; i++ ) {

Accum = OutputWeights[HiddenNodes][i] ;

for( j = 0 ; j < HiddenNodes ; j++ ) {

Accum += Hidden[j] * OutputWeights[j][i] ;

}

Output[i] = 1.0/(1.0 + exp(-Accum)) ;

OutputDelta[i] = (Target[p][i] - Output[i]) * Output[i] * (1.0 - Output[i]) ;

Error += 0.5 * (Target[p][i] - Output[i]) * (Target[p][i] - Output[i]) ;

}

/******************************************************************

* Backpropagate errors to hidden layer

******************************************************************/

for( i = 0 ; i < HiddenNodes ; i++ ) {

Accum = 0.0 ;

for( j = 0 ; j < OutputNodes ; j++ ) {

Accum += OutputWeights[i][j] * OutputDelta[j] ;

}

HiddenDelta[i] = Accum * Hidden[i] * (1.0 - Hidden[i]) ;

}

/******************************************************************

* Update Inner-->Hidden Weights

******************************************************************/

for( i = 0 ; i < HiddenNodes ; i++ ) {

ChangeHiddenWeights[InputNodes][i] = LearningRate * HiddenDelta[i] + Momentum * ChangeHiddenWeights[InputNodes][i] ;

HiddenWeights[InputNodes][i] += ChangeHiddenWeights[InputNodes][i] ;

for( j = 0 ; j < InputNodes ; j++ ) {

ChangeHiddenWeights[j][i] = LearningRate * Input[p][j] * HiddenDelta[i] + Momentum * ChangeHiddenWeights[j][i];

HiddenWeights[j][i] += ChangeHiddenWeights[j][i] ;

}

}

/******************************************************************

* Update Hidden-->Output Weights

******************************************************************/

for( i = 0 ; i < OutputNodes ; i ++ ) {

ChangeOutputWeights[HiddenNodes][i] = LearningRate * OutputDelta[i] + Momentum * ChangeOutputWeights[HiddenNodes][i] ;

OutputWeights[HiddenNodes][i] += ChangeOutputWeights[HiddenNodes][i] ;

for( j = 0 ; j < HiddenNodes ; j++ ) {

ChangeOutputWeights[j][i] = LearningRate * Hidden[j] * OutputDelta[i] + Momentum * ChangeOutputWeights[j][i] ;

OutputWeights[j][i] += ChangeOutputWeights[j][i] ;

}

}

}

/******************************************************************

* Every 1000 cycles send data to terminal for display

******************************************************************/

ReportEvery1000 = ReportEvery1000 - 1;

if (ReportEvery1000 == 0)

{

Serial.println();

Serial.println();

Serial.print ("TrainingCycle: ");

Serial.print (TrainingCycle);

Serial.print (" Error = ");

Serial.println (Error, 5);

toTerminal();

if (TrainingCycle==1)

{

ReportEvery1000 = 999;

}

else

{

ReportEvery1000 = 1000;

}

}

/******************************************************************

* If error rate is less than pre-determined threshold then end

******************************************************************/

if( Error < Success ) break ;

}

Serial.println ();

Serial.println();

Serial.print ("TrainingCycle: ");

Serial.print (TrainingCycle);

Serial.print (" Error = ");

Serial.println (Error, 5);

toTerminal();

Serial.println ();

Serial.println ();

Serial.println ("Training Set Solved! ");

Serial.println ("--------");

Serial.println ();

Serial.println ();

ReportEvery1000 = 1;

}

void toTerminal()

{

for( p = 0 ; p < PatternCount ; p++ ) {

Serial.println();

Serial.print (" Training Pattern: ");

Serial.println (p);

Serial.print (" Input ");

for( i = 0 ; i < InputNodes ; i++ ) {

Serial.print (Input[p][i], DEC);

Serial.print (" ");

}

Serial.print (" Target ");

for( i = 0 ; i < OutputNodes ; i++ ) {

Serial.print (Target[p][i], DEC);

Serial.print (" ");

}

/******************************************************************

* Compute hidden layer activations

******************************************************************/

for( i = 0 ; i < HiddenNodes ; i++ ) {

Accum = HiddenWeights[InputNodes][i] ;

for( j = 0 ; j < InputNodes ; j++ ) {

Accum += Input[p][j] * HiddenWeights[j][i] ;

}

Hidden[i] = 1.0/(1.0 + exp(-Accum)) ;

}

/******************************************************************

* Compute output layer activations and calculate errors

******************************************************************/

for( i = 0 ; i < OutputNodes ; i++ ) {

Accum = OutputWeights[HiddenNodes][i] ;

for( j = 0 ; j < HiddenNodes ; j++ ) {

Accum += Hidden[j] * OutputWeights[j][i] ;

}

Output[i] = 1.0/(1.0 + exp(-Accum)) ;

}

Serial.print (" Output ");

for( i = 0 ; i < OutputNodes ; i++ ) {

Serial.print (Output[i], 5);

Serial.print (" ");

}

}

}

with this output..

and convert to this mbed compiler, like this one..

#include <math.h>

#include <mbed.h>

Serial pc(USBTX, USBRX);

const int PatternCount = 10;

const int InputNodes = 7;

const int HiddenNodes = 8;

const int OutputNodes = 4;

const float LearningRate = 0.3;

const float Momentum = 0.9;

const float InitialWeightMax = 0.5;

const float Success = 0.0004;

const byte Input[PatternCount][InputNodes] = {

{ 1, 1, 1, 1, 1, 1, 0 }, // 0

{ 0, 1, 1, 0, 0, 0, 0 }, // 1

{ 1, 1, 0, 1, 1, 0, 1 }, // 2

{ 1, 1, 1, 1, 0, 0, 1 }, // 3

{ 0, 1, 1, 0, 0, 1, 1 }, // 4

{ 1, 0, 1, 1, 0, 1, 1 }, // 5

{ 0, 0, 1, 1, 1, 1, 1 }, // 6

{ 1, 1, 1, 0, 0, 0, 0 }, // 7

{ 1, 1, 1, 1, 1, 1, 1 }, // 8

{ 1, 1, 1, 0, 0, 1, 1 } // 9

};

const byte Target[PatternCount][OutputNodes] = {

{ 0, 0, 0, 0 },

{ 0, 0, 0, 1 },

{ 0, 0, 1, 0 },

{ 0, 0, 1, 1 },

{ 0, 1, 0, 0 },

{ 0, 1, 0, 1 },

{ 0, 1, 1, 0 },

{ 0, 1, 1, 1 },

{ 1, 0, 0, 0 },

{ 1, 0, 0, 1 }

};

int i, j, p, q, r;

int ReportEvery1000;

int RandomizedIndex[PatternCount];

long TrainingCycle;

float Rando;

float Error;

float Accum;

float Hidden[HiddenNodes];

float Output[OutputNodes];

float HiddenWeights[InputNodes+1][HiddenNodes];

float OutputWeights[HiddenNodes+1][OutputNodes];

float HiddenDelta[HiddenNodes];

float OutputDelta[OutputNodes];

float ChangeHiddenWeights[InputNodes+1][HiddenNodes];

float ChangeOutputWeights[HiddenNodes+1][OutputNodes];

int main()

{

pc.baud(9600);

randomSeed(analogRead(3));

ReportEvery1000 = 1;

for( p = 0 ; p < PatternCount ; p++ )

{

RandomizedIndex[p] = p ;

}

void loop ()

{

for( i = 0 ; i < HiddenNodes ; i++ )

{

for( j = 0 ; j <= InputNodes ; j++ )

{

ChangeHiddenWeights[j][i] = 0.0 ;

Rando = float(random(100))/100;

HiddenWeights[j][i] = 2.0 * ( Rando - 0.5 ) * InitialWeightMax ;

}

}

for( i = 0 ; i < OutputNodes ; i ++ )

{

for( j = 0 ; j <= HiddenNodes ; j++ )

{

ChangeOutputWeights[j][i] = 0.0 ;

Rando = float(random(100))/100;

OutputWeights[j][i] = 2.0 * ( Rando - 0.5 ) * InitialWeightMax ;

}

}

pc.printf("Initial/Untrained Outputs: \n");

toTerminal();

for( TrainingCycle = 1 ; TrainingCycle < 2147483647 ; TrainingCycle++)

{

for( p = 0 ; p < PatternCount ; p++)

{

q = random(PatternCount);

r = RandomizedIndex[p] ;

RandomizedIndex[p] = RandomizedIndex[q] ;

RandomizedIndex[q] = r ;

}

Error = 0.0 ;

for( q = 0 ; q < PatternCount ; q++ )

{

p = RandomizedIndex[q];

for( i = 0 ; i < HiddenNodes ; i++ )

{

Accum = HiddenWeights[InputNodes][i] ;

for( j = 0 ; j < InputNodes ; j++ )

{

Accum += Input[p][j] * HiddenWeights[j][i] ;

}

Hidden[i] = 1.0/(1.0 + exp(-Accum)) ;

}

for( i = 0 ; i < OutputNodes ; i++ )

{

Accum = OutputWeights[HiddenNodes][i] ;

for( j = 0 ; j < HiddenNodes ; j++ )

{

Accum += Hidden[j] * OutputWeights[j][i] ;

}

Output[i] = 1.0/(1.0 + exp(-Accum)) ;

OutputDelta[i] = (Target[p][i] - Output[i]) * Output[i] * (1.0 - Output[i]) ;

Error += 0.5 * (Target[p][i] - Output[i]) * (Target[p][i] - Output[i]) ;

}

for( i = 0 ; i < HiddenNodes ; i++ )

{

Accum = 0.0 ;

for( j = 0 ; j < OutputNodes ; j++ )

{

Accum += OutputWeights[i][j] * OutputDelta[j] ;

}

HiddenDelta[i] = Accum * Hidden[i] * (1.0 - Hidden[i]) ;

}

for( i = 0 ; i < HiddenNodes ; i++ )

{

ChangeHiddenWeights[InputNodes][i] = LearningRate * HiddenDelta[i] + Momentum * ChangeHiddenWeights[InputNodes][i] ;

HiddenWeights[InputNodes][i] += ChangeHiddenWeights[InputNodes][i] ;

for( j = 0 ; j < InputNodes ; j++ )

{

ChangeHiddenWeights[j][i] = LearningRate * Input[p][j] * HiddenDelta[i] + Momentum * ChangeHiddenWeights[j][i];

HiddenWeights[j][i] += ChangeHiddenWeights[j][i] ;

}

}

for( i = 0 ; i < OutputNodes ; i ++ )

{

ChangeOutputWeights[HiddenNodes][i] = LearningRate * OutputDelta[i] + Momentum * ChangeOutputWeights[HiddenNodes][i] ;

OutputWeights[HiddenNodes][i] += ChangeOutputWeights[HiddenNodes][i] ;

for( j = 0 ; j < HiddenNodes ; j++ )

{

ChangeOutputWeights[j][i] = LearningRate * Hidden[j] * OutputDelta[i] + Momentum * ChangeOutputWeights[j][i] ;

OutputWeights[j][i] += ChangeOutputWeights[j][i] ;

}

}

}

ReportEvery1000 = ReportEvery1000 - 1;

if (ReportEvery1000 == 0)

{

pc.printf ("TrainingCycle: ");

pc.printf ("%i",(int16_t)TrainingCycle);

pc.printf (" Error = ");

pc.printf ("%i\n",(int16_t)Error, 5);

toTerminal();

if (TrainingCycle==1)

{

ReportEvery1000 = 999;

}

else

{

ReportEvery1000 = 1000;

}

}

if( Error < Success ) break ;

}

pc.printf ("TrainingCycle: ");

pc.printf ("%i",(int16_t)TrainingCycle);

pc.printf (" Error = ");

pc.printf ("%i\n",(int16_t)Error, 5);

toTerminal();

pc.printf ("Training Set Solved!\n");

pc.printf ("--------\n");

ReportEvery1000 = 1;

}

}

void toTerminal()

{

for( p = 0 ; p < PatternCount ; p++ ) {

pc.printf (" Training Pattern: ");

pc.printf ("%i\n",(int16_t)p);

pc.printf (" Input ");

for( i = 0 ; i < InputNodes ; i++ ) {

pc.printf ("%i,%i",(int16_t)Input[p][i],(int16_t)DEC);

pc.printft (" ");

}

pc.printf (" Target ");

for( i = 0 ; i < OutputNodes ; i++ ) {

pc.printf ("%i,%i",(int16_t)Input[p][i],(int16_t)DEC);

Serial.print (" ");

}

/******************************************************************

* Compute hidden layer activations

******************************************************************/

for( i = 0 ; i < HiddenNodes ; i++ ) {

Accum = HiddenWeights[InputNodes][i] ;

for( j = 0 ; j < InputNodes ; j++ ) {

Accum += Input[p][j] * HiddenWeights[j][i] ;

}

Hidden[i] = 1.0/(1.0 + exp(-Accum)) ;

}

/******************************************************************

* Compute output layer activations and calculate errors

******************************************************************/

for( i = 0 ; i < OutputNodes ; i++ ) {

Accum = OutputWeights[HiddenNodes][i] ;

for( j = 0 ; j < HiddenNodes ; j++ ) {

Accum += Hidden[j] * OutputWeights[j][i] ;

}

Output[i] = 1.0/(1.0 + exp(-Accum)) ;

}

pc.printf (" Output ");

for( i = 0 ; i < OutputNodes ; i++ ) {

pc.printf ("%i",Output[i], 5);

pc.printf (" ");

}

}

}

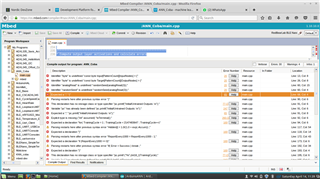

but there are several message problem like this one,

so please help me soon, this is the my mbed code..ANN mbed

thanks for your kindness..