I'm experiencing a strange issue with the USB CDC example when connecting the nRF52840 to Windows 10. This is with the 15.2 SDK. I'm using the usbd_ble_uart example on the BMD340 DK. When I build the stock example and connect the BMD-USB port to my PC, the DK connects as a virtual COM port as expected. I can open it with a terminal emulator and see that data is being transferred. I'm using this example project to build software that connects to a custom application. If my custom application tries to talk to the DK BEFORE I test the connection with the terminal emulator, the custom application cannot send data to the virtual COM port. Simply opening the port and then closing it with the terminal emulator puts the DK port in a "better" state, and the custom application is then able to talk to the port.

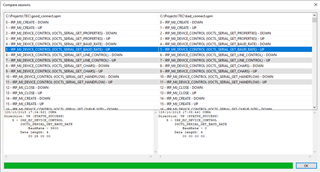

It smelled like an OS issue, so I fired up a serial port sniffer to see what was being sent during the different connection attempts. When the custom application tries to read the baud rate and data width from the virtual COM port BEFORE the terminal emulator has used the port, it receives a baud rate of 0 and a data width of 0. Once the terminal emulator has opened and closed the connection and I re-sniff the session, the baud rate is 9600 and the data width is 8 as expected. Please see the attached screen captures for side-by-side comparisons of good and bad connections (good on left, bad on right; first baud rate, then data width).

I've seen this issue on multiple PCs. I've also tried updating the Windows USB CDC driver with the nordic_cdc_acm_examples.inf file. Somehow, for the initial connection, either the nRF52840's USB stack or the virtual COM port is in a strange state, and no good connection is possible until the terminal emulator makes a connection. Using the same port sniffing software I can see that the terminal emulator doesn't check the current values; it just writes the values it wants and moves forward.
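For reference, the baud rate and data width discussed above travel in the 7-byte line coding payload that the USB CDC ACM class defines for the SET_LINE_CODING (0x20) and GET_LINE_CODING (0x21) requests. The following is only a minimal sketch of that layout, assuming the standard field order (little-endian dwDTERate, then bCharFormat, bParityType, bDataBits). The type `line_coding_t` and the helper functions are hypothetical names for illustration and are not part of the nRF5 SDK or of any Windows API.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Size of the CDC ACM line coding payload carried by
 * SET_LINE_CODING (0x20) and GET_LINE_CODING (0x21). */
#define LINE_CODING_SIZE 7u

/* Hypothetical helper type for illustration; not an nRF5 SDK type. */
typedef struct {
    uint32_t baud_rate; /* dwDTERate, bits per second */
    uint8_t  stop_bits; /* bCharFormat: 0 = 1 stop bit, 1 = 1.5, 2 = 2 */
    uint8_t  parity;    /* bParityType: 0 = none, 1 = odd, 2 = even */
    uint8_t  data_bits; /* bDataBits: 5, 6, 7, 8 or 16 */
} line_coding_t;

/* Serialize a line coding into the 7-byte wire format (little-endian rate). */
void line_coding_encode(const line_coding_t *lc, uint8_t out[LINE_CODING_SIZE])
{
    assert(lc != NULL && out != NULL);
    out[0] = (uint8_t)(lc->baud_rate & 0xFFu);
    out[1] = (uint8_t)((lc->baud_rate >> 8) & 0xFFu);
    out[2] = (uint8_t)((lc->baud_rate >> 16) & 0xFFu);
    out[3] = (uint8_t)((lc->baud_rate >> 24) & 0xFFu);
    out[4] = lc->stop_bits;
    out[5] = lc->parity;
    out[6] = lc->data_bits;
}

/* Parse the 7-byte wire format back into a line coding. */
line_coding_t line_coding_decode(const uint8_t in[LINE_CODING_SIZE])
{
    line_coding_t lc;
    assert(in != NULL);
    lc.baud_rate = (uint32_t)in[0]
                 | ((uint32_t)in[1] << 8)
                 | ((uint32_t)in[2] << 16)
                 | ((uint32_t)in[3] << 24);
    lc.stop_bits = in[4];
    lc.parity    = in[5];
    lc.data_bits = in[6];
    return lc;
}

/* Returns 1 when the coding looks like it was never set
 * (baud rate 0 or data width 0, as seen in the "bad" capture). */
int line_coding_is_unset(const line_coding_t *lc)
{
    assert(lc != NULL);
    return (lc->baud_rate == 0u) || (lc->data_bits == 0u);
}
```

With these helpers, 9600 baud, 8 data bits, no parity, 1 stop bit encodes to the bytes `80 25 00 00 00 00 08`, while an all-zero payload decodes to the baud rate 0 and data width 0 seen in the "bad" capture. A host application could use a check like `line_coding_is_unset` to decide to write its own settings instead of trusting the values it reads, which is roughly what the terminal emulator appears to do.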

So my questions are:

- If it's the nRF52's USB stack, is there a way to pre-set it so that it returns the correct baud rate and data width on the initial connection?

- If it's the virtual comm port, is there any way to set/reset it from the nRF52 side so that it's in the correct state for the initial connection?

Thanks
