Hi to all,

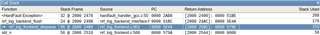

I have found that, using nRF5 SDK 17.0.0 on an nRF52832, whenever I call NRF_LOG_HEXDUMP_DEBUG on a large buffer (more than 1000 elements), I get a Data Access Violation Fault.

I understand that, because NRF_LOG processing is deferred and my buffer is allocated on the stack, the buffer pointer can become corrupted before the log entry is actually processed.

With that in mind, I call NRF_LOG_FINAL_FLUSH right after NRF_LOG_HEXDUMP_DEBUG so that the logs are fully flushed while the buffer is still in scope.

I have also increased NRF_LOG_BUFSIZE to 4096 so that the log buffer can hold my entire buffer (1800 bytes).
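For reference, this is roughly how the change looks in my `sdk_config.h`. The comment lines follow the SDK template's annotation style as far as I recall; if I remember correctly, the template also states that the value must be a power of 2 and a multiple of 4, which 4096 satisfies.

```c
// sdk_config.h (excerpt)
// <o> NRF_LOG_BUFSIZE  - Size of the buffer for storing logs (in bytes).
// <i> Must be power of 2 and multiple of 4.
#ifndef NRF_LOG_BUFSIZE
#define NRF_LOG_BUFSIZE 4096
#endif
```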

I have also noticed that the output of NRF_LOG_HEXDUMP_DEBUG is capped at 80 bytes (8 columns x 10 rows), even when the buffer contains more data and the length argument passed to NRF_LOG_HEXDUMP_DEBUG is larger than 80.

Below is a code snippet. I dump the buffer immediately after declaring it (while it is still all zeroes) to rule out errors in the functions that manipulate its contents. I still get the Data Access Violation Fault.

```c
uint8_t cert_buffer[1800] = {0};

NRF_LOG_HEXDUMP_INFO(cert_buffer, 1800);

NRF_LOG_FINAL_FLUSH();
```

Am I missing something or is this a bug? Can anyone reproduce this behavior?

Thanks in advance,

Pedro