Hi,

I would like to use the PDM interface for my application on the nRF52840.

I configured the driver and am using the SDK's API successfully.

Question 1:

As part of development, I am exploring different ways to handle the data buffer during the (p_evt->buffer_released) event.

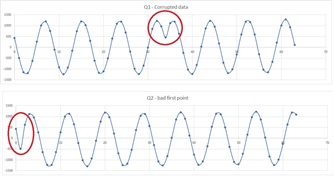

I see that if I take too long inside the function, the data is corrupted. My initial suspicion was that (p_evt->buffer_requested) was raised while the CPU was still handling (p_evt->buffer_released) (perhaps to keep the measurement continuous), and that since I always supply the same buffer, it was overwritten. However, I debugged and saw that (p_evt->buffer_requested) was not handled until (p_evt->buffer_released) was done, which contradicts this hypothesis.

What is causing the data to be corrupted if I spend too long inside the (p_evt->buffer_released) handler? Is the HW still writing through the pointer I supplied earlier?

More importantly, how much time is allowed inside (p_evt->buffer_released) before the data becomes unreliable, as described above?

Question 2:

In some cases, the first 1-2 measurements at the beginning of the buffer are bad (an otherwise perfect sine wave is distorted in its first samples). Is this a known stabilization issue, or something else?

The two pictures below show the recorded results for the two questions, respectively.

Thanks,

Alon Barak

Qcore medical