Hello,

I would appreciate some guidance about BLE connection stability. Our product is a system of 3 peripherals (custom boards built around Laird's BL652 module, which contains an nRF52832), all simultaneously connected to our iOS app (central). The peripheral application is written with Nordic SDK 15.3 plus S112 v6.1.1 and was initially based on the ble_app_uart example. Our iOS app uses Apple's Core Bluetooth framework and currently supports iOS 9.3 or newer.

Each peripheral has a sensor and uses GPIOTE, PPI and TIMERs to timestamp events and send them to the connected central via NUS (Nordic UART Service). Events occur randomly: sometimes there is a minute or two between events, and sometimes multiple events occur in quick succession. The clocks (TIMER2) on the three peripherals are synchronized using the Timeslot API, based on a simplified version of this Nordic blog. The timeslot length is 1000 microseconds.

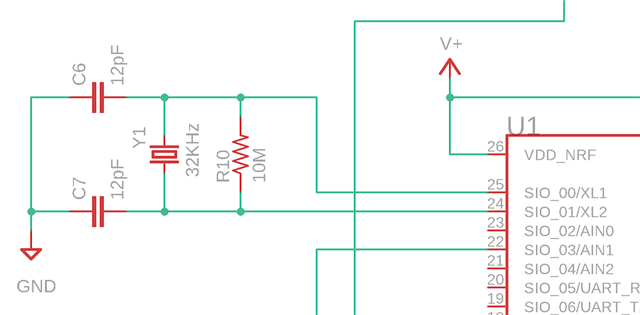

For higher accuracy, our peripheral app requests the high-frequency clock (sd_clock_hfclk_request()), which I understand sets the clock source to XTAL. The BL652 has an integrated high-accuracy 32 MHz (±10 ppm) crystal oscillator.

This is a mobile system that is set up with the peripherals at most 150 feet apart (open space, line-of-sight). Each peripheral is powered by 2 AA batteries. For testing, we set up three systems to exercise concurrent use of multiple 3-peripheral systems connected to multiple iOS devices. Each of the three systems (each comprising 3 peripherals) uses a different radio address for the time sync using the Timeslot API.

Connection parameters for the peripherals are:

#define MIN_CONN_INTERVAL MSEC_TO_UNITS(30, UNIT_1_25_MS)

#define MAX_CONN_INTERVAL MSEC_TO_UNITS(75, UNIT_1_25_MS)

#define SLAVE_LATENCY 0

#define CONN_SUP_TIMEOUT MSEC_TO_UNITS(4000, UNIT_10_MS)

#define FIRST_CONN_PARAMS_UPDATE_DELAY APP_TIMER_TICKS(5000)

#define NEXT_CONN_PARAMS_UPDATE_DELAY APP_TIMER_TICKS(30000)

#define MAX_CONN_PARAMS_UPDATE_COUNT 3
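As a sanity check on these values, here is a minimal sketch of what the macros work out to in link-layer units, plus a rough check against Apple's connection parameter rules. The `MSEC_TO_UNITS` arithmetic mirrors the SDK macro but is redefined locally so the snippet compiles without the SDK. The `conn_params_ms_t` type and `apple_rules_ok()` helper are hypothetical names, and the rule set is an assumption based on my reading of Apple's Accessory Design Guidelines, so it should be checked against the current revision.

```c
#include <stdbool.h>
#include <stdint.h>

/* Same arithmetic as the SDK's MSEC_TO_UNITS (app_util.h), redefined here
 * so the sketch builds standalone. Resolutions are in microseconds. */
#define UNIT_1_25_MS 1250u
#define UNIT_10_MS   10000u
#define MSEC_TO_UNITS(ms, res) (((ms) * 1000u) / (res))

/* Hypothetical helper type (not an SDK type): parameters in milliseconds. */
typedef struct {
    uint32_t min_interval_ms;
    uint32_t max_interval_ms;
    uint32_t slave_latency;
    uint32_t sup_timeout_ms;
} conn_params_ms_t;

/* ASSUMPTION: rules as I understand them from Apple's Accessory Design
 * Guidelines; verify against the revision you are targeting. */
static bool apple_rules_ok(const conn_params_ms_t *p)
{
    uint32_t effective_max = p->max_interval_ms * (p->slave_latency + 1u);
    bool interval_gap_ok =
        (p->min_interval_ms + 15u <= p->max_interval_ms) ||
        (p->min_interval_ms == 15u && p->max_interval_ms == 15u);

    return p->slave_latency <= 30u
        && p->sup_timeout_ms >= 2000u && p->sup_timeout_ms <= 6000u
        && p->min_interval_ms >= 15u
        && (p->min_interval_ms % 15u) == 0u
        && interval_gap_ok
        && effective_max <= 2000u
        && effective_max * 3u < p->sup_timeout_ms;
}
```

With the values above, `MSEC_TO_UNITS(30, UNIT_1_25_MS)` is 24, `MSEC_TO_UNITS(75, UNIT_1_25_MS)` is 60, and `MSEC_TO_UNITS(4000, UNIT_10_MS)` is 400, and `apple_rules_ok()` accepts `{30, 75, 0, 4000}` under the assumed rules.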

I understand these conform to Apple's requirements. We do NOT need high throughput and send only small amounts of data (<20 bytes) between central and peripherals. In sdk_config.h, NRF_SDH_BLE_GATT_MAX_MTU_SIZE is 23. In ble_evt_handler(), on BLE_GAP_EVT_CONNECTED, I set the transmit power level to +4 dBm:

err_code = sd_ble_gap_tx_power_set(BLE_GAP_TX_POWER_ROLE_CONN, m_conn_handle, 4);

Using a BLE sniffer, for iOS 13.5.1 on an iPhone SE (2020 model) we see the following CONNECT_REQ packet:

Bluetooth Low Energy Link Layer

Access Address: 0x8e89bed6

Packet Header: 0x22c5 (PDU Type: CONNECT_REQ, ChSel: #1, TxAdd: Random, RxAdd: Random)

Initator Address: 5c:d1:b4:78:4e:43 (5c:d1:b4:78:4e:43)

Advertising Address: d5:88:07:32:7a:ad (d5:88:07:32:7a:ad)

Link Layer Data

Access Address: 0x50654a99

CRC Init: 0x28ce8e

Window Size: 3 (3.75 msec)

Window Offset: 7 (8.75 msec)

Interval: 24 (30 msec)

Latency: 0

Timeout: 72 (720 msec)

Channel Map: ff07c0ff1f

.... ...1 = RF Channel 1 (2404 MHz - Data - 0): True

.... ..1. = RF Channel 2 (2406 MHz - Data - 1): True

.... .1.. = RF Channel 3 (2408 MHz - Data - 2): True

.... 1... = RF Channel 4 (2410 MHz - Data - 3): True

...1 .... = RF Channel 5 (2412 MHz - Data - 4): True

..1. .... = RF Channel 6 (2414 MHz - Data - 5): True

.1.. .... = RF Channel 7 (2416 MHz - Data - 6): True

1... .... = RF Channel 8 (2418 MHz - Data - 7): True

.... ...1 = RF Channel 9 (2420 MHz - Data - 8): True

.... ..1. = RF Channel 10 (2422 MHz - Data - 9): True

.... .1.. = RF Channel 11 (2424 MHz - Data - 10): True

.... 0... = RF Channel 13 (2428 MHz - Data - 11): False

...0 .... = RF Channel 14 (2430 MHz - Data - 12): False

..0. .... = RF Channel 15 (2432 MHz - Data - 13): False

.0.. .... = RF Channel 16 (2434 MHz - Data - 14): False

0... .... = RF Channel 17 (2436 MHz - Data - 15): False

.... ...0 = RF Channel 18 (2438 MHz - Data - 16): False

.... ..0. = RF Channel 19 (2440 MHz - Data - 17): False

.... .0.. = RF Channel 20 (2442 MHz - Data - 18): False

.... 0... = RF Channel 21 (2444 MHz - Data - 19): False

...0 .... = RF Channel 22 (2446 MHz - Data - 20): False

..0. .... = RF Channel 23 (2448 MHz - Data - 21): False

.1.. .... = RF Channel 24 (2450 MHz - Data - 22): True

1... .... = RF Channel 25 (2452 MHz - Data - 23): True

.... ...1 = RF Channel 26 (2454 MHz - Data - 24): True

.... ..1. = RF Channel 27 (2456 MHz - Data - 25): True

.... .1.. = RF Channel 28 (2458 MHz - Data - 26): True

.... 1... = RF Channel 29 (2460 MHz - Data - 27): True

...1 .... = RF Channel 30 (2462 MHz - Data - 28): True

..1. .... = RF Channel 31 (2464 MHz - Data - 29): True

.1.. .... = RF Channel 32 (2466 MHz - Data - 30): True

1... .... = RF Channel 33 (2468 MHz - Data - 31): True

.... ...1 = RF Channel 34 (2470 MHz - Data - 32): True

.... ..1. = RF Channel 35 (2472 MHz - Data - 33): True

.... .1.. = RF Channel 36 (2474 MHz - Data - 34): True

.... 1... = RF Channel 37 (2476 MHz - Data - 35): True

...1 .... = RF Channel 38 (2478 MHz - Data - 36): True

..0. .... = RF Channel 0 (2402 MHz - Reserved for Advertising - 37): False

.0.. .... = RF Channel 12 (2426 MHz - Reserved for Advertising - 38): False

0... .... = RF Channel 39 (2480 MHz - Reserved for Advertising - 39): False

...0 1111 = Hop: 15

001. .... = Sleep Clock Accuracy: 151 ppm to 250 ppm (1)

CRC: 0x419071
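To make the channel map in this CONNECT_REQ easier to read, here is a small sketch that decodes the five ChM bytes (ff 07 c0 ff 1f) into a count of enabled data channels. The function names are hypothetical helpers, not SDK or Wireshark APIs. It assumes the bytes are in the order shown in the trace, with the least significant bit of the first byte corresponding to data channel 0, which matches the per-bit listing above.

```c
#include <stdbool.h>
#include <stdint.h>

#define BLE_DATA_CHANNELS 37u

/* ChM is 5 bytes; bit 0 of byte 0 is data channel 0. */
static bool data_channel_used(const uint8_t map[5], unsigned ch)
{
    return ((map[ch / 8u] >> (ch % 8u)) & 1u) != 0u;
}

/* Count how many of the 37 data channels are enabled in the map. */
static unsigned used_channel_count(const uint8_t map[5])
{
    unsigned n = 0;
    for (unsigned ch = 0; ch < BLE_DATA_CHANNELS; ch++) {
        if (data_channel_used(map, ch)) {
            n++;
        }
    }
    return n;
}

/* Data channel index to centre frequency in MHz. Indices 0..10 sit at
 * 2404..2424 MHz and 11..36 at 2428..2478 MHz, because 2426 MHz is
 * advertising channel 38. */
static unsigned data_channel_mhz(unsigned ch)
{
    return (ch <= 10u) ? (2404u + 2u * ch) : (2406u + 2u * ch);
}
```

For the map in this trace, `used_channel_count()` gives 26 of 37 data channels enabled. The disabled block is data channels 11 through 21, which `data_channel_mhz()` places at 2428 MHz through 2448 MHz.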

So we have 9 peripherals: 3 connected to one iOS device, 3 connected to another iOS device, and 3 connected to a third iOS device. I'm noticing random disconnects. On the iOS side, centralManager(_:didDisconnectPeripheral:error:) reports error 6, "The connection has timed out."

Have we bumped into some of the practical limits of BLE? I captured a random disconnect in a sniffer trace; see attached (Wireshark with an nRF52 DK and nRF Sniffer 3.0.0). I notice a lot of LL_CHANNEL_MAP_REQ packets, but I don't have much knowledge of BLE at this level. Is there anything we can do to increase connection stability? We request a 4-second supervision timeout, but the central chooses 720 milliseconds. Should we use higher minimum and maximum connection intervals? Our central app generally uses write-with-response (.withResponse) when writing characteristic values.
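To put the timeout difference in numbers, here is a rough sketch of how many connection intervals fit inside the supervision timeout, using the raw units from the sniffer trace (interval in 1.25 ms units, timeout in 10 ms units). The helper name is hypothetical. Since slave latency is 0, each interval is a connection event, and the link is dropped once no valid packet has been received for the whole timeout.

```c
#include <stdint.h>

/* Whole connection intervals that fit in the supervision timeout.
 * interval_units: connection interval in 1.25 ms units.
 * timeout_units:  supervision timeout in 10 ms units. */
static uint32_t events_before_timeout(uint16_t interval_units,
                                      uint16_t timeout_units)
{
    uint32_t interval_us = (uint32_t)interval_units * 1250u;
    uint32_t timeout_us  = (uint32_t)timeout_units * 10000u;
    return timeout_us / interval_us;
}
```

With the values the central chose (interval 24, timeout 72), that is 24 consecutive missed events before the link times out. With the 4-second timeout we request (400 units), it would be 133 events at a 30 ms interval, or 53 events at a 75 ms interval.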

I would appreciate any information or guidance.

Many thanks,

Tim