Hi,

I used one nRF52840 dongle and one DK, and placed a sniffer between them to capture the packets.

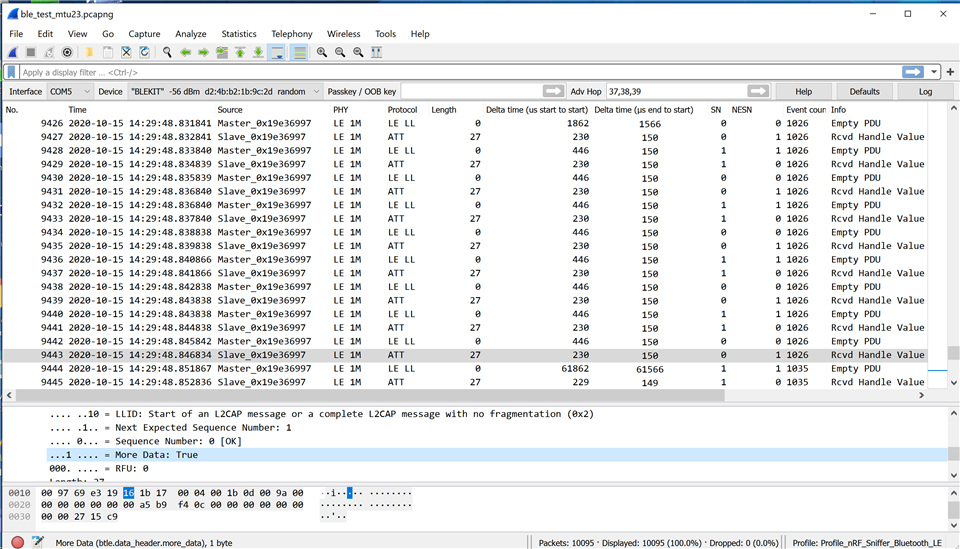

The connection interval was set to 7.5 msec, the GAP event length to 6 (6 × 1.25 msec = 7.5 msec), the ATT MTU to 23 bytes and the data length to 27 bytes, using SoftDevice S140 v7.2.0.

The sniffer capture shows:

- 9 packets in one connection interval

- the highlighted slave response has MD=1 (More Data), but the master did not reply; instead, it waited 61 msec

I have read a few Nordic articles stating that the maximum number of packets per connection interval is 6, but there are 9 in this case. Also, why did the master ignore the slave's MD=1 packet and wait 61 msec instead?
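For reference, here is a rough air-time estimate of how many master/slave packet pairs could fit into one 7.5 msec event. It is only a sketch under my own assumptions: 1M PHY (the PHY is not stated above), the master sends full 27-byte LL payloads, the slave answers each one with an empty PDU, and SoftDevice scheduling overhead and margins are ignored. The function names are hypothetical.

```python
# Rough air-time estimate on the BLE 1M PHY (1 bit per microsecond).
# Assumptions: master sends 27-byte LL payloads, slave replies with empty PDUs,
# SoftDevice scheduling overhead and end-of-event margins are ignored.

US_PER_BYTE_1M = 8            # 1 Mbit/s -> 8 us per byte
T_IFS_US = 150                # inter-frame space between consecutive packets
EVENT_LENGTH_UNIT_US = 1250   # GAP event length is configured in 1.25 msec units


def pdu_air_time_us(payload_len: int, encrypted: bool = False) -> int:
    """Air time of one LL data packet on the 1M PHY, in microseconds."""
    preamble, access_address, header, crc = 1, 4, 2, 3
    # The MIC is only present on encrypted packets with a non-empty payload.
    mic = 4 if encrypted and payload_len > 0 else 0
    total_bytes = preamble + access_address + header + payload_len + mic + crc
    return total_bytes * US_PER_BYTE_1M


def max_pairs_per_event(event_length_units: int, payload_len: int = 27,
                        encrypted: bool = False) -> int:
    """Rough count of (master data, slave empty) pairs fitting in one event."""
    event_us = event_length_units * EVENT_LENGTH_UNIT_US
    # One pair: master packet, T_IFS, slave empty packet, T_IFS.
    pair_us = (pdu_air_time_us(payload_len, encrypted) + T_IFS_US
               + pdu_air_time_us(0, encrypted) + T_IFS_US)
    return event_us // pair_us


print(pdu_air_time_us(27))      # 296
print(max_pairs_per_event(6))   # 11
```

Under these assumptions, the air time alone does not cap an event at 6 packets; any lower limit would come from the stack's scheduling rather than from the radio timing.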

Could you help me to understand it?

Also, we are trying to achieve the highest possible packet rate. In theory, if we set the MTU small enough and use a 7.5 msec connection interval, can we achieve 6 packets per connection interval and reach 800 packets per second between two devices?

(1000 msec / 7.5 msec) × 6 packets = 800 PPS
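The same arithmetic as a small Python sketch, with the connection interval and packets per interval as parameters. The function names are hypothetical, and the byte-rate function assumes each packet carries exactly one notification of ATT_MTU − 3 bytes (20 bytes with MTU 23), which is my assumption about how the data would be sent.

```python
def packets_per_second(conn_interval_ms: float, packets_per_interval: int) -> float:
    """Packets per second if every connection event carries the same number of packets."""
    # Multiply first so that 6 * 1000 / 7.5 stays exact in floating point.
    return packets_per_interval * 1000.0 / conn_interval_ms


def att_bytes_per_second(conn_interval_ms: float, packets_per_interval: int,
                         att_mtu: int = 23) -> float:
    """Application payload rate, assuming one (ATT_MTU - 3)-byte notification per packet."""
    return packets_per_second(conn_interval_ms, packets_per_interval) * (att_mtu - 3)


print(packets_per_second(7.5, 6))    # 800.0
print(att_bytes_per_second(7.5, 6))  # 16000.0
```

Plugging in the 9 packets seen in the capture instead of 6 gives `packets_per_second(7.5, 9)`, i.e. 1200 packets per second, if every event were that full.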