Hi, as part of my project i have 2 nordic 52dks one as a pheripheral (sending) and on as a client (recieving). where i am sening a buffer of 8, in the order

buffer[0] = 10

buffer[1}= X gyro lsb

buffer[2] = X gyro msb

buffer[3] = y gyro lsb

buffer[4] = y gyro msb

buffer[5] = z gyro lsb

buffer[6] = z gyro msb

buffer[7] = 36 //terminator $

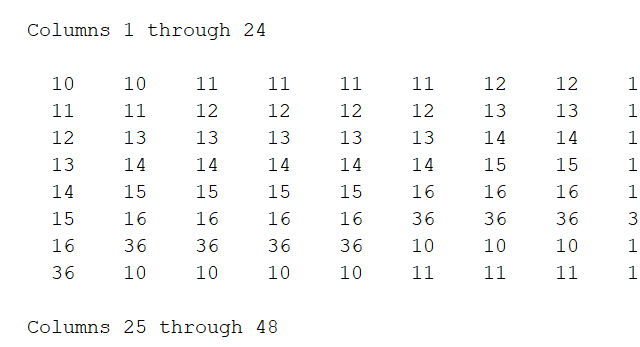

however sometimes i seem to lose a byte over ble such that the next byte received will be less and hence the order is correupted thus making processing rather and unpredictable for a large sample size like 1000000 samples.

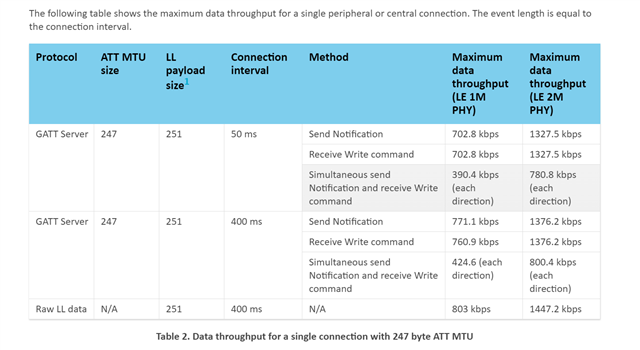

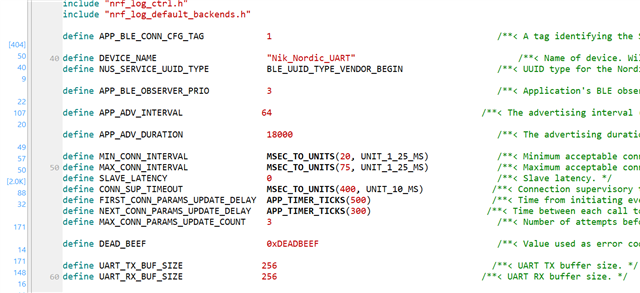

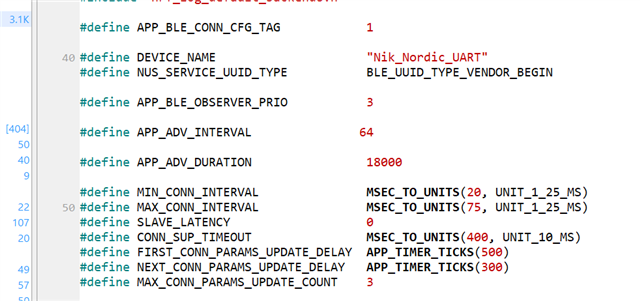

how may i ensure that my ble settings are correct such that i ensure to limit this issue.

my code was based of the examples ble_app_uart and ble_app_uart_c for the peripheral and client respectivly.

thank u in advance