In my setup, I have a 3.3 V battery divided down to the bandgap range using 56k and 10k resistors, so the voltage at the ADC input should be 3.3 * (10/66) = 0.5 V, but it actually measures 0.498 V.
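For reference, the divider arithmetic can be checked with a few lines of Python. This is only a sketch: the function name `divider_output` is made up for illustration, and it assumes an ideal, unloaded divider with the 56k resistor on top and the 10k resistor to ground.

```python
def divider_output(v_in, r_top, r_bottom):
    """Voltage across r_bottom for an ideal, unloaded resistive divider."""
    return v_in * r_bottom / (r_top + r_bottom)

# Assumed values from the setup: 3.3 V battery, 56k on top, 10k to ground.
v_adc = divider_output(3.3, 56e3, 10e3)
print(f"Expected ADC input: {v_adc:.3f} V")
```

The 2 mV gap between this ideal value and the measured 0.498 V is small enough to be explained by resistor tolerance or the exact battery voltage.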

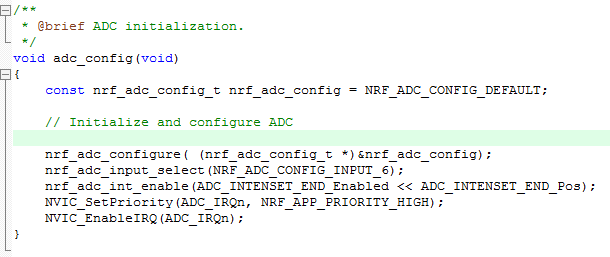

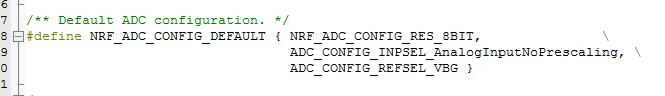

I've configured the ADC to convert at 8 bits, to use no prescaler, and to use the internal VBG as reference.

The result I get is 0x6A, or 106, which translates to a measured voltage at the ADC input of: (106/256) * 1.2 = 0.497 V

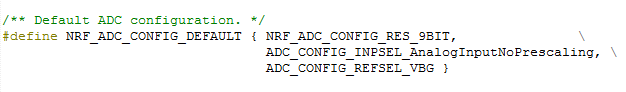

I've configured the ADC to convert at 9 bits, to use no prescaler, and to use the internal VBG as reference. The result I get is 0xD3, or 211, which translates to a measured voltage at the ADC input of: (211/512) * 1.2 = 0.495 V

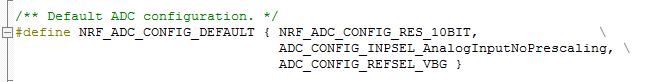

I've configured the ADC to convert at 10 bits, to use no prescaler, and to use the internal VBG as reference. The result I get is 0xA7, or 167, which translates to a measured voltage at the ADC input of: (167/1024) * 1.2 = 0.196 V
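To make the three conversions easy to compare, here is a minimal Python sketch of the same arithmetic. It assumes a nominal 1.2 V bandgap reference and a full scale of 2^bits, as in the calculations above; `code_to_volts` is a hypothetical helper name, not part of any SDK.

```python
V_REF = 1.2  # assumed nominal VBG reference, in volts

def code_to_volts(code, bits, v_ref=V_REF):
    """Convert a raw ADC code to volts, using 2**bits as full scale."""
    return code / (1 << bits) * v_ref

# (raw code, resolution in bits) as read in the three experiments
readings = [(0x6A, 8), (0xD3, 9), (0xA7, 10)]
for code, bits in readings:
    print(f"{bits:2d} bits: 0x{code:03X} = {code:4d} -> {code_to_volts(code, bits):.3f} V")

# Observation only, not a confirmed cause: 0xA7 + 0x100 = 0x1A7 = 423,
# which would convert to a value in line with the 8- and 9-bit results.
hypothetical_code = 0x1A7
print(f"if the code were 0x1A7: {code_to_volts(hypothetical_code, 10):.3f} V")
```

One thing that stands out from the arithmetic is that 0xA7 is exactly the low byte of 0x1A7 (423), and 423 at 10 bits gives about 0.496 V. I have not confirmed that this is what is happening; it is only what the numbers suggest.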

These results suggest that the higher the ADC resolution, the lower the accuracy. Can anyone tell me why?

Thanks in advance.
