Hello DevZone,

I have a project where I want to use NFCT for both communication and charging. I use the NRFX_NFCT driver to read from and write to my transmitter, because the transmitter does not support NDEF messages.

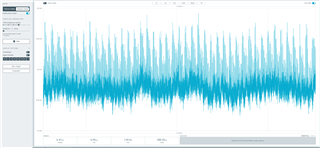

When the NFC field is on and my microcontroller reports that it has been selected, the Power Profiler shows a very large current draw.

I cannot explain or find out where all this current goes. In normal operation this current draw is not an issue; it only becomes a problem while the system is starting up.

For comparison, I also measured the writable NDEF example project with the profiler, and its current draw was even worse.

Can anyone explain where all this current is going, and perhaps help me reduce it (if that is possible at all)?

Based on the electrical specifications in the datasheet, I expected a current draw of around 500 µA.

Kind regards,

Tom