I am seeing cases where the More Data (MD) bit is not set in the LLID Start packet when it is followed by an LLID Continuation packet. I can see this happen when the max packet data length (maxTxOctets and maxRxOctets) is 251 bytes and the ATT payload is greater than 251 bytes, requiring at least one packet of 251 bytes to be transmitted. I do not see this happen if I simply lower the maxOctets to 250 bytes. Instead, at 250 bytes, I see an ATT payload excessively broken up into 250 bytes + 1 byte + the remaining bytes (for example, 18 remaining bytes).
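To make the sizes concrete, here is a minimal Python sketch of the arithmetic. It assumes the 269-byte total from the example (251 + 18) and a naive single-pass split at the max octets. `split_payload` is a hypothetical helper for illustration only, not an NCS or controller API.

```python
def split_payload(total_len, max_octets):
    """Split total_len bytes into fragments of at most max_octets each."""
    sizes = []
    remaining = total_len
    while remaining > 0:
        chunk = min(remaining, max_octets)
        sizes.append(chunk)
        remaining -= chunk
    return sizes


TOTAL = 251 + 18  # 269 bytes, the example size used in this post

print(split_payload(TOTAL, 251))  # [251, 18]
print(split_payload(TOTAL, 250))  # [250, 19]
```

A single-pass split at 250 bytes would give 250 + 19, which is not the 250 + 1 + 18 pattern I see on air.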

For the data below:

- NCS 2.0

- Nordic Bluetooth controller

- Custom board with nRF5340 acting as a peripheral/GATT server

- PHY 1M

- Connection Interval 30 msec.

- MTU 500 bytes.

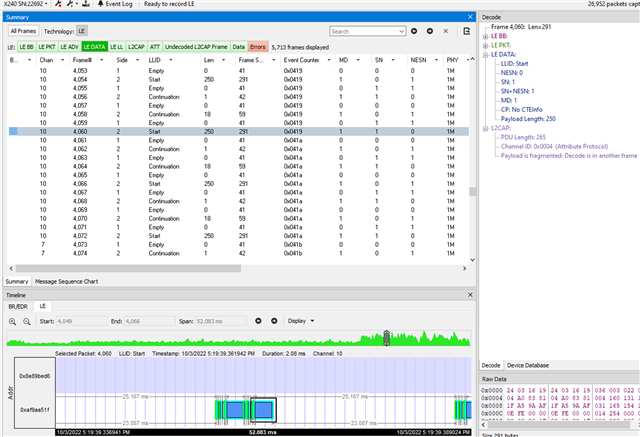

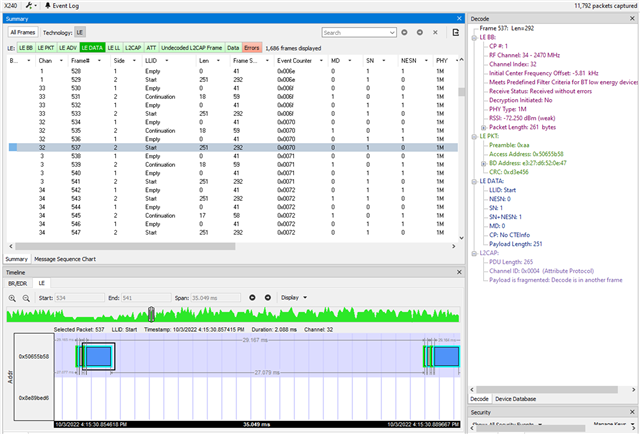

In the screenshot below of an X240 sniffer capture, we are interested in the peripheral transmission, Side 2, consisting of GATT Notifications.

- Frame 537 (highlighted) is an LLID Start of 251 bytes. Its MD bit is not set, as shown in the MD column.

- Frame 539 is an LLID Continuation of 18 bytes, and concludes the upper layer transmission (in this case, a GATT Notification requiring 251+18 bytes).

Because frame 537 does not have the MD bit set, the central stops the connection event, causing frame 539 (LLID Continuation) to be deferred to the next connection interval, reducing throughput. When the MD bit is set, we typically see the central allow the connection event to continue this far into the event.
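The behavior I expect can be written as a small Python sketch. It assumes the peripheral already has the whole upper-layer PDU queued when the first fragment goes out, and that nothing else is queued behind it, so every fragment except the last carries MD=1. `expected_headers` is a hypothetical helper, not controller code.

```python
def expected_headers(fragment_sizes):
    """Return the LLID and MD value I would expect for each fragment."""
    headers = []
    last = len(fragment_sizes) - 1
    for i, size in enumerate(fragment_sizes):
        headers.append({
            "llid": "Start" if i == 0 else "Continuation",
            # Assumption: more fragments of the same PDU are pending,
            # so MD is set on everything except the final fragment.
            "md": 1 if i < last else 0,
            "len": size,
        })
    return headers


for header in expected_headers([251, 18]):
    print(header)
```

Under that assumption, the 251-byte Start should have MD=1 and the 18-byte Continuation MD=0, which is what frame 537 does not show.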

Question 1: Shouldn’t frame 537 have the MD bit set since it’s followed by an LLID Continuation?

On the other hand,

- Frame 543 is another LLID Start of 251 bytes followed by an LLID Continuation. In this case, the MD bit is set, as expected, and both frames are sent in the same connection event.

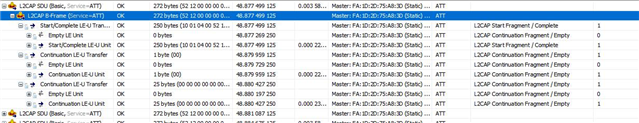

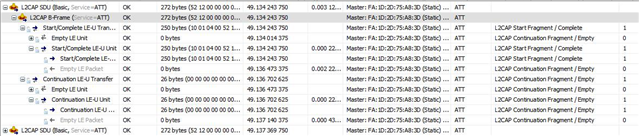

With max data length set to 250 bytes (instead of 251), from spot checking, I think all LLID Starts that are followed by an LLID Continuation do indeed have the MD bit set. But I see peculiar behavior, as shown below from another log:

- Frame 4,060 (highlighted) is an LLID Start of 250 bytes. Its MD bit is set, but the central chose to end the connection event anyway here (a typical stopping point, timewise, for the central being used).

- Frame 4,062 is an LLID Continuation of just 1 byte, followed by yet another LLID Continuation of 18 bytes in frame 4,064.

Question 2: Why are frames 4,062 and 4,064 broken up instead of being sent as a single 19-byte LLID Continuation? Isn’t this inefficient for throughput?
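For what it's worth, the 250 + 1 + 18 pattern is exactly what falls out if the 269-byte payload is first cut at 251 bytes and each piece is then cut again at 250 bytes. This is only a guess on my part that happens to reproduce the numbers. I have not confirmed that the host or controller actually works this way, and both function names below are hypothetical.

```python
def chunks(total_len, limit):
    """Sizes of consecutive pieces of at most `limit` bytes."""
    return [min(limit, total_len - off) for off in range(0, total_len, limit)]


def two_stage_split(total_len, first_limit, second_limit):
    """Cut at first_limit, then cut each resulting piece at second_limit."""
    out = []
    for piece in chunks(total_len, first_limit):
        out.extend(chunks(piece, second_limit))
    return out


print(two_stage_split(269, 251, 250))  # [250, 1, 18]
print(two_stage_split(269, 251, 251))  # [251, 18]
```

If something like this is happening, is there a setting that keeps the two limits aligned so the 1-byte fragment goes away?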