This is part 3 of the series Building a Bluetooth application on nRF Connect SDK

You can find other parts here:

Part 1 - Peripheral Role.

Part 2 - Central Role.

In Part 1 we covered the generic architecture, the peripheral role and GATT Server. In Part 2 we discussed the Central Role and the GATT client.

Part 3 analyzes the options for optimizing a connection for latency, power consumption, and throughput. We also provide a NUS (Nordic UART Service) throughput demo that you can use as a reference design.


1. Control the connection parameters.

1.1 Background

We start with a short description of the connection parameters:

-

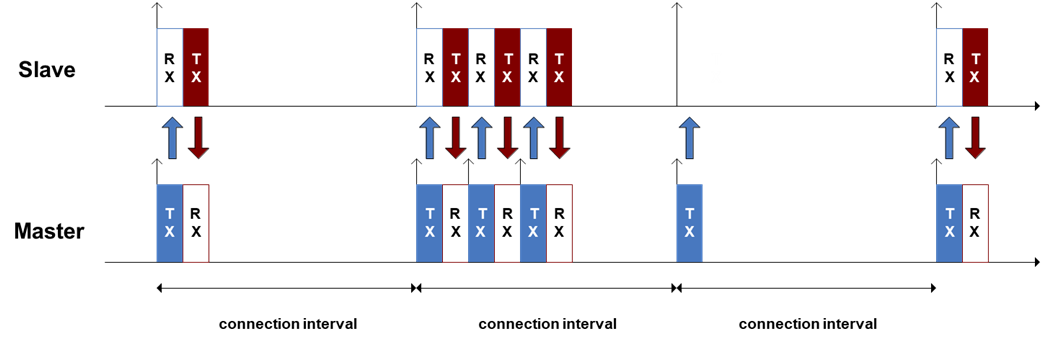

Interval: defines the interval of the connection. How frequently the master/central will send a connection event packet to slave. The unit of connection interval is 1.25ms.

-

Latency: Slave latency. The slave/peripheral can skip waking up and respond to the connection event from master to slave. The latency is the number of connect event the slave can skip. This is to save power on the slave side. When it has no data it can skip some connection events. But the sleeping period should not be too long so that the connection will timeout.

-

Timeout: How long would the master keeps sending connection event without a response from the slave before the connection is terminated.
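To make the units and the relationship between the three parameters concrete, here is a minimal sketch in plain C. The helper names are hypothetical and are not part of the Zephyr API; the ranges and the timeout rule are the ones I understand the Bluetooth Core Specification to require, so treat this as an illustration rather than a reference implementation.

```c
#include <stdbool.h>
#include <stdint.h>

/* Connection interval is given in 1.25 ms units; return microseconds
 * so that everything stays in integer arithmetic. */
static uint32_t conn_interval_to_us(uint16_t interval_units)
{
	return (uint32_t)interval_units * 1250U;
}

/* Supervision timeout is given in 10 ms units. */
static uint32_t conn_timeout_to_ms(uint16_t timeout_units)
{
	return (uint32_t)timeout_units * 10U;
}

/* Plausibility check (hypothetical helper):
 *  - interval: 6..3200 units (7.5 ms..4 s), min <= max
 *  - latency:  0..499 events
 *  - timeout:  10..3200 units (100 ms..32 s)
 *  - timeout must be longer than (1 + latency) * interval_max * 2
 */
static bool conn_params_plausible(uint16_t interval_min, uint16_t interval_max,
				  uint16_t latency, uint16_t timeout)
{
	if (interval_min < 6U || interval_max > 3200U ||
	    interval_min > interval_max) {
		return false;
	}
	if (latency > 499U) {
		return false;
	}
	if (timeout < 10U || timeout > 3200U) {
		return false;
	}
	/* timeout * 10 ms > (1 + latency) * interval_max * 1.25 ms * 2
	 * simplifies to timeout * 4 > (1 + latency) * interval_max. */
	return ((uint32_t)timeout * 4U) >
	       ((1U + latency) * (uint32_t)interval_max);
}
```

For example, an interval of 6 units is 7.5 ms, and a timeout of 400 units is 4 s. A long interval combined with a high latency quickly pushes the required timeout up, which is why the last check matters.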

1.2 Controlling the connection parameters

In a Zephyr Bluetooth LE central application, if you don't set the connection parameter when initializing scanning, the default connection parameter will be used:

Connection interval: 30 ms (min) to 50 ms (max), latency: 0, timeout: 4 s (BT_LE_CONN_PARAM_DEFAULT).

These values suit many applications: they strike a good balance between low latency and low power consumption. If you need to change them, you can either pass your own connection parameters to bt_scan_init() or modify the definition of BT_LE_CONN_PARAM_DEFAULT in conn.h.

An example of setting your own connection parameters with low latency (you can use this in bt_scan_init() and bt_conn_le_create() ):

//minimum connection interval = maximum connection interval = 6*1.25 = 7.5ms
//slave latency = 15
//Connection timeout = 30 * 10 = 300ms
#define BT_LE_MY_CONN_PARAM BT_LE_CONN_PARAM(6, 6, 15, 30)
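To see what these numbers mean in practice, the sketch below works out how long the peripheral may stay silent when it uses its full latency, and how many such wake-ups fit inside the supervision timeout. The function names are hypothetical helpers written for this illustration, not SDK functions.

```c
#include <stdint.h>

/* Longest time the peripheral may stay silent: it skips `latency`
 * connection events and answers the next one. Result in microseconds.
 * interval_units is in 1.25 ms units. */
static uint32_t max_peripheral_sleep_us(uint16_t interval_units,
					uint16_t latency)
{
	return (1U + latency) * (uint32_t)interval_units * 1250U;
}

/* Number of full-latency wake-ups that fit inside the supervision
 * timeout (timeout_units is in 10 ms units). A small result means a
 * few lost packets are enough to drop the link. */
static uint32_t wakeups_within_timeout(uint16_t interval_units,
				       uint16_t latency,
				       uint16_t timeout_units)
{
	uint32_t timeout_us = (uint32_t)timeout_units * 10000U;

	return timeout_us / max_peripheral_sleep_us(interval_units, latency);
}
```

With the values above (interval 6, latency 15, timeout 30), the peripheral may sleep for up to 120 ms and gets only two full-latency wake-ups inside the 300 ms timeout, so there is little margin for packet loss. With the default parameters (50 ms maximum interval, latency 0, 4 s timeout) there are 80.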

On the peripheral side, if you leave CONFIG_BT_GAP_AUTO_UPDATE_CONN_PARAMS=y, the connection parameter update request is sent automatically 5 seconds after the connection is established. The 5-second delay leaves enough time for the connection, still running at a short interval (30-50 ms for example), to complete service discovery quickly. If you immediately set the connection interval to, say, 1000 ms, service discovery would take much longer. The default preferred parameters are similar to the ones on the central, except that the connection timeout is set to 420 ms. You can redefine these values in your project configuration (Kconfig):

CONFIG_BT_PERIPHERAL_PREF_MIN_INT
CONFIG_BT_PERIPHERAL_PREF_MAX_INT
CONFIG_BT_PERIPHERAL_PREF_LATENCY
CONFIG_BT_PERIPHERAL_PREF_TIMEOUT
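As an illustration, a prj.conf fragment that spells out these options could look like the following. The values are chosen to match the defaults described above (30-50 ms interval, latency 0, 420 ms timeout), assuming the intervals use 1.25 ms units and the timeout uses 10 ms units; adjust them to your own requirements.

```
# Let the stack request the preferred parameters automatically
CONFIG_BT_GAP_AUTO_UPDATE_CONN_PARAMS=y
# 24 * 1.25 ms = 30 ms
CONFIG_BT_PERIPHERAL_PREF_MIN_INT=24
# 40 * 1.25 ms = 50 ms
CONFIG_BT_PERIPHERAL_PREF_MAX_INT=40
CONFIG_BT_PERIPHERAL_PREF_LATENCY=0
# 42 * 10 ms = 420 ms
CONFIG_BT_PERIPHERAL_PREF_TIMEOUT=42
```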

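You can also request an update at runtime by calling bt_conn_le_param_update(). The result is reported through the le_param_updated connection callback:

static struct bt_le_conn_param *conn_param = BT_LE_CONN_PARAM(INTERVAL_MIN, INTERVAL_MAX, 0, 400);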

static int update_connection_parameters(void)

{

int err;

err = bt_conn_le_param_update(current_conn, conn_param);

if (err) {

LOG_ERR("Cannot update connection parameters (err: %d)", err);

return err;

}

LOG_INF("Connection parameters update requested");

return 0;

}

//callback

static void conn_params_updated(struct bt_conn *conn, uint16_t interval, uint16_t latency, uint16_t timeout)

{

LOG_INF("Conn params updated: interval %d unit, latency %d, timeout: %d0 ms",interval, latency, timeout);

}

2. Optimize the connection for throughput - NUS throughput example

2.1 Background

2.2 NUS throughput example

In nRF Connect SDK we have a throughput sample that you can use to benchmark the BLE throughput with different configurations of the connection interval, ATT_MTU, data length and PHY. However, that sample requires you to run its firmware on both sides of the connection, and the results may not carry over when you control only one side, for example when connecting to a phone.

static void request_mtu_exchange(void)

{

int err;

static struct bt_gatt_exchange_params exchange_params;

exchange_params.func = MTU_exchange_cb;

err = bt_gatt_exchange_mtu(current_conn, &exchange_params);

if (err) {

LOG_WRN("MTU exchange failed (err %d)", err);

} else {

LOG_INF("MTU exchange pending");

}

}

static void request_data_len_update(void)

{

int err;

err = bt_conn_le_data_len_update(current_conn, BT_LE_DATA_LEN_PARAM_MAX);

if (err) {

LOG_ERR("LE data length update request failed: %d", err);

}

}

static void request_phy_update(void)

{

int err;

err = bt_conn_le_phy_update(current_conn, BT_CONN_LE_PHY_PARAM_2M);

if (err) {

LOG_ERR("Phy update request failed: %d", err);

}

}

#GATT_CLIENT needed for requesting ATT_MTU update
CONFIG_BT_GATT_CLIENT=y
#PHY update needed for updating PHY request
CONFIG_BT_USER_PHY_UPDATE=y
#For data length update
CONFIG_BT_USER_DATA_LEN_UPDATE=y
#This is the maximum data length with Nordic Softdevice controller
CONFIG_BT_CTLR_DATA_LENGTH_MAX=251
#These buffers are needed for the data length max.
CONFIG_BT_BUF_ACL_TX_SIZE=251
CONFIG_BT_BUF_ACL_RX_SIZE=251
#This is the maximum MTU size with Nordic Softdevice controller
CONFIG_BT_L2CAP_TX_MTU=247
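The values 251 and 247 in this configuration are related: a 4-byte L2CAP header is added to every ATT packet, so an ATT_MTU of 247 is the largest that still fits in a single 251-byte link-layer packet. A notification also carries a 3-byte ATT header (opcode plus attribute handle), which leaves at most 244 bytes for application data. The sketch below captures that arithmetic; the macro and function names are hypothetical and exist only for this illustration.

```c
#include <stdint.h>

#define L2CAP_HDR_SIZE      4U /* length (2 bytes) + channel ID (2 bytes) */
#define ATT_NOTIFY_HDR_SIZE 3U /* opcode (1 byte) + attribute handle (2 bytes) */

/* Largest ATT_MTU whose L2CAP frame still fits in one link-layer
 * packet. Assumes ll_data_len is at least the 27-byte minimum. */
static uint16_t max_att_mtu_for_data_len(uint16_t ll_data_len)
{
	return (uint16_t)(ll_data_len - L2CAP_HDR_SIZE);
}

/* Largest application payload of a single notification for a given
 * ATT_MTU. Assumes att_mtu is at least the 23-byte minimum. */
static uint16_t notify_payload_for_mtu(uint16_t att_mtu)
{
	return (uint16_t)(att_mtu - ATT_NOTIFY_HDR_SIZE);
}
```

The same arithmetic explains the defaults without any update: a 27-byte data length gives an ATT_MTU of 23 and 20 bytes of payload per notification.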

The example's main task is to send 300 notification packets as fast as possible. Each packet has the maximum size allowed by the data length (24 bytes by default).

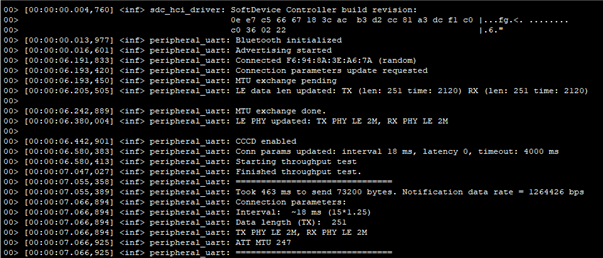

The application requests a connection parameter update 300 ms after the connection is established. Once the CCCD is enabled and the connection parameters are updated, it starts transmitting notifications on the NUS TX characteristic by calling bt_nus_send().

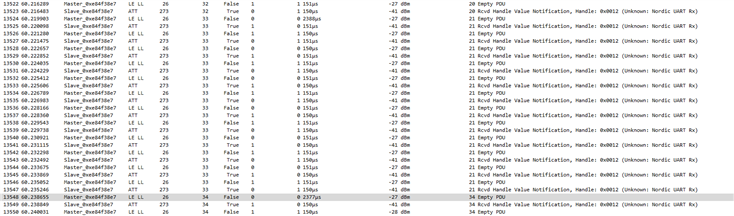

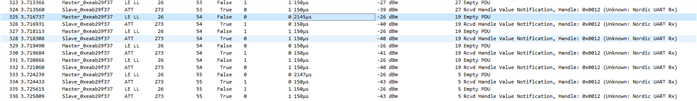

The following sniffer trace shows the communication with an 18.75 ms connection interval (2 Mbps PHY, 251-byte DLE, 247-byte ATT MTU). Twelve notifications (243 bytes of payload each) were sent in a single connection interval. The throughput was 1264 kbps.
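As a rough cross-check of such a trace, the throughput can be estimated from the number of packets per connection interval, the bytes per packet, and the interval. The function below is a hypothetical helper for that estimate, not part of the example firmware.

```c
#include <stdint.h>

/* Estimated throughput in bit/s when `packets` packets of
 * `bytes_per_packet` bytes are sent in every connection interval.
 * interval_units is in 1.25 ms units (15 units = 18.75 ms). */
static uint32_t throughput_bps(uint32_t packets, uint32_t bytes_per_packet,
			       uint16_t interval_units)
{
	uint64_t bits = (uint64_t)packets * bytes_per_packet * 8U;
	uint64_t interval_us = (uint64_t)interval_units * 1250U;

	return (uint32_t)(bits * 1000000U / interval_us);
}
```

Calling throughput_bps(12, 243, 15) gives 1244160 bit/s, about 1244 kbps of pure payload. Counting 247 bytes per packet instead gives 1264640 bit/s, which matches the 1264 kbps quoted above, so that figure presumably counts the full ATT packet rather than the payload alone. This is my reading of the numbers, not something the trace states.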

Tested with SDK v2.5.2.

3. Optimize the connection for low power consumption

(TBD)

We will provide current measurements for common use cases with different connection parameter configurations. In short, to achieve low power consumption, keep the connection interval short at the beginning so that service discovery finishes as quickly as possible, then switch to a longer interval. If you need to transmit a large amount of data, switch to a shorter connection interval for the transfer and switch back to the longer one afterwards.
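The strategy described here can be sketched as a small table of per-phase parameters. Everything in this block is hypothetical: the phase names, the helper functions, and especially the interval values, which are placeholders rather than measured recommendations. The number of wake-ups per minute is used only as a rough proxy for radio activity, not as a current figure.

```c
#include <stdint.h>

/* Hypothetical phases of a connection, following the text above. */
enum link_phase {
	PHASE_DISCOVERY,
	PHASE_IDLE,
	PHASE_BULK_TRANSFER,
};

struct phase_params {
	uint16_t interval_units; /* connection interval, 1.25 ms units */
	uint16_t latency;        /* events the peripheral may skip */
};

/* Illustrative values only: short interval while discovering services
 * or moving bulk data, long interval while idle. */
static struct phase_params params_for_phase(enum link_phase phase)
{
	switch (phase) {
	case PHASE_DISCOVERY:
		return (struct phase_params){ 24, 0 };  /* 30 ms */
	case PHASE_BULK_TRANSFER:
		return (struct phase_params){ 12, 0 };  /* 15 ms */
	case PHASE_IDLE:
	default:
		return (struct phase_params){ 800, 0 }; /* 1000 ms */
	}
}

/* Connection events per minute the peripheral has to wake up for
 * when it uses its full latency. */
static uint32_t wakeups_per_minute(struct phase_params p)
{
	uint32_t period_us =
		(1U + p.latency) * (uint32_t)p.interval_units * 1250U;

	return 60000000U / period_us;
}
```

With these placeholder values the peripheral wakes up 2000 times per minute during discovery, 4000 times during a bulk transfer, and only 60 times while idle, which is why switching back to the long interval after a transfer matters.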

Further reading

1. Accessory Design Guidelines for Apple Devices. In chapter 40 you can find the recommended connection parameters that work best with Apple devices.
