Hello.

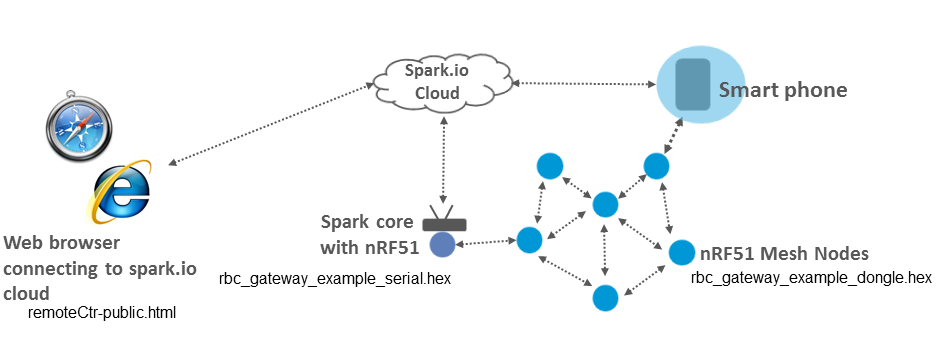

I spent the last few days implementing an interface to access a BLE mesh via the Internet. The mesh consists of devices with LEDs, which can then be turned on and off from my browser. The entry point to my local devices is a Particle Core, which is reachable from a web browser through the particle.io cloud service. That's a nice way to control all kinds of devices easily from a website.

Setup

Which software you need depends on whether you want to use softdevice S110 version 7 or 8.

Setup with S110 v7

We originally used the following devices and software:

- nRF51-Dongle(PCA10000) flashed with rbc_gateway_example_dongle.hex

- nRF51-DK(PCA10001) flashed with rbc_gateway_example_serial.hex

- nRF51-Dongle and nRF51-DK both have softdevice S110 v7.2

- Particle Core, Photon or Electron programmed with the ble-mesh-cloud.ino file found in the nRF8001-ble-uart-spark-io library, which is available in Particle's web IDE or in the project on GitHub.

- remoteCtr-public.html to control the application via your browser

The hex files for the nRF51-DK and the dongle were both compiled from the nrf51-ble-bcast-mesh project.

Setup with S110 v8

Now you can also use softdevice S110 v8.0 and SDK 8. Check the branch SDK-8-support of the github project nrf51-ble-bcast-mesh for the sources and use the latest release (v0.6.10-SD8) for the new hex files for the development kit and the dongle. What you need is:

- nRF51-Dongle(PCA10031) flashed with rbc_gatt_BOARD_PCA10031.hex

- nRF51-DK(PCA10028) flashed with rbc_gatt_serial_BOARD_PCA10028.hex

- nRF51-Dongle and nRF51-DK both have softdevice S110 v8.0

- Particle Core, Photon or Electron programmed with the ble-mesh-cloud.ino file found in the nRF8001-ble-uart-spark-io library, which is available in Particle's web IDE or in the project on GitHub.

- remoteCtr-public.html to control the application via your browser

(the last two bullet points are the same for softdevice v7 and v8)

The project contains a compile target "nRF51822 xxAA serial" for the nRF51-DK used as the gateway and a target "nRF51822 xxAA" for the nRF51-Dongles used as mesh nodes. To build these hex files, follow the directions in the project's readme file and define the board you want to use in the project file.

Now to the overall setup. We send commands as HTTP requests from the html file. These commands go to the Particle cloud, from where they are automatically forwarded to our Particle Core. Communication between the cloud and BLE goes through a gateway, which speaks BLE on one side and Wi-Fi on the other. It consists of an nRF51-DK board for the BLE part and a Particle Core for the Wi-Fi part. They are connected through a 5-wire SPI bus, similar to the SPI connection used to interface a Nordic nRF8001. (See section 7 of the nRF8001 specification.) The supported mesh commands are explained at https://github.com/NordicSemiconductor/nRF51-ble-bcast-mesh/blob/master/application_controller/serial_interface/README.adoc

The hex files for the nRF51 contain SPI and BLE mesh drivers that allow it to receive commands for the mesh (e.g. write and read handle values) and to execute them.

The Particle device should, after some initialization ritual, connect automatically to the local Wi-Fi and can be programmed over the spark.io web IDE.

To connect the Particle and the nRF51-DK you can use an nRF5x Adapter Shield or connect them with wires. The Particle board also powers the nRF51-DK, so plug the USB cable into the Particle board only.

If you connect them with wires, connect the following lines:

Line: Particle -> nRF

Power: 3V3 -> VDD

Ground: GND -> GND

Reset: D2 -> RESET

REQN: A2 -> P0.24

RDYN: D5 -> P0.20

SCK: A3 -> P0.29

MISO: A4 -> P0.28

MOSI: A5 -> P0.25

Bridge the solder bridge SB17 on the nRF51-DK

You might have noticed the REQN and RDYN lines. REQN is basically the slave select, while RDYN is used to indicate to the master that the slave is ready for transmission.

We can use many nRF51-Dongles or nRF51-DKs to form a BLE mesh (the nRF51-DKs do not have RGB LEDs). nRF51-Dongles have two colored LEDs. The state of each one is given by a mesh handle, which can be read and written over the interface. The nRF51-Dongles together with the nRF51-DK form a mesh on BLE channel 38 with the access address 0xA541A68F, but due to endianness we will have to write it the other way around in our application later. The access address and channel can be changed to fit the needs of your application.

Programming the Particle

Programming the Particle can be done in a browser using Particle's web IDE. First you need to go through this tutorial to get your Spark Core connected to the Wi-Fi and associated with your account. Write down the Particle device ID, which you will need later. Once that is done, you can load a small example such as the "Blink an LED" app onto the Core to check that everything is working correctly.

We then can create the app for the device. We add the library "nrf8001-ble-uart-spark-io" with the needed drivers and the example sketch file by clicking "Libraries" -> "nrf8001-ble-uart-spark-io" -> "ble-mesh-cloud.ino" -> "use this example". The program can then be flashed to the device.

A Closer Look at the .ino File

The main programming logic is written in the sketch file. Let's go through it step by step:

    #include "nrf8001-ble-uart-spark-io/lib_aci.h"
    #include "nrf8001-ble-uart-spark-io/boards.h"
    #include "nrf8001-ble-uart-spark-io/rbc_mesh_interface.h"
    #include "nrf8001-ble-uart-spark-io/serial_evt.h"

Here we include drivers for the SPI module and the interface for the communication.

    enum state_t{
        init,
        ready,
        waitingForEvent
    };

    int state = init;

We need a state to know whether we are ready to receive a new command from the cloud, or whether a new command would interfere with our initialization process or with the communication with the SPI slave.

You may have noticed that the state is declared as an int, not a state_t. Internally that makes no difference, but we want to read the state out with an HTTP GET request and display it later, so let's use a standard data type.
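Because the cloud only ever sees the integer, it helps to keep the numbering of the enumerators in mind: C++ assigns them 0, 1 and 2 in declaration order. The following is a minimal standalone sketch of how a client could map the number back to a name; `state_name` and `example_state_names` are hypothetical helpers for illustration and are not part of the sketch or the library.

```cpp
#include <cassert>
#include <string>

// Same enumerators as in the sketch; they are numbered 0, 1, 2 in order.
enum state_t { init, ready, waitingForEvent };

// Hypothetical helper: turns the integer read from the cloud variable
// "state" back into a readable name.
std::string state_name(int value) {
    switch (value) {
        case init:            return "init";
        case ready:           return "ready";
        case waitingForEvent: return "waitingForEvent";
        default:              return "unknown";
    }
}

// Usage example: a value of 1 read from the cloud means "ready".
void example_state_names() {
    assert(state_name(1) == "ready");
}
```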

int lastResponse;

Later on we want to send requests via SPI, triggered by an HTTP POST request, and save the answer from the nRF51-DK (which is the SPI slave) in this variable so it can be picked up by an HTTP GET request. We cannot implement a function that sends the SPI command, waits for the answer and returns it, because function calls on the Spark Core should not take longer than a certain time; the Core needs to run some other functions which are invisible to the user (Wi-Fi communication, I guess). OK, let's go on:

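    int set_val(String args){
        // Reject the request while the gateway is initializing or busy.
        if(state != ready){
            return -1;
        }
        state = waitingForEvent;
        // args holds two digits: the handle followed by its new value.
        uint8_t handle = args[0] - '0';
        uint8_t value = args[1] - '0';
        return rbc_mesh_value_set(handle, &value, (uint8_t) 1);
    }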

This is the callback function for whenever an HTTP POST request to set a handle arrives. It receives a string of two digits: the handle and its new value. That data is enqueued to be sent via SPI to the slave. Also notice that we set the state to waitingForEvent, so no new requests will be handled before the slave acknowledges a successful transmission.
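The two-digit argument format can be tried out on a PC without any hardware. The following is a minimal sketch using `std::string` instead of the Particle `String` class; `SetCommand`, `parse_set_args` and `example_parse` are hypothetical names for illustration. Unlike the callback above, it also rejects input that is not exactly two decimal digits, which is an addition of mine and not something the original sketch does.

```cpp
#include <cassert>
#include <cstdint>
#include <string>

// Hypothetical result type for this illustration.
struct SetCommand {
    bool ok;         // false if the argument string was malformed
    uint8_t handle;  // mesh handle taken from the first digit
    uint8_t value;   // new value taken from the second digit
};

// Parse an argument such as "21" (handle 2, value 1).
SetCommand parse_set_args(const std::string& args) {
    SetCommand cmd{false, 0, 0};
    if (args.size() != 2) return cmd;
    if (args[0] < '0' || args[0] > '9') return cmd;
    if (args[1] < '0' || args[1] > '9') return cmd;
    cmd.handle = static_cast<uint8_t>(args[0] - '0');
    cmd.value = static_cast<uint8_t>(args[1] - '0');
    cmd.ok = true;
    return cmd;
}

// Usage example: "21" means "set handle 2 to value 1".
void example_parse() {
    SetCommand cmd = parse_set_args("21");
    assert(cmd.ok && cmd.handle == 2 && cmd.value == 1);
}
```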

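    int get_val_req(String args){
        // Reject the request while the gateway is initializing or busy.
        if(state != ready){
            return -1;
        }
        state = waitingForEvent;
        // args holds one digit: the handle to read.
        uint8_t handle = args[0] - '0';
        return rbc_mesh_value_get(handle);
    }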

This one is the callback function to get a handle value. Like the other callback function, it just receives its string and enqueues the message to be sent via SPI. This is the point where we cannot return the answer directly; it can be picked up later, after the SPI slave (nRF51-DK) has responded with the data.

Next we start the initialization routine:

    aci_pins_t pins;

    void setup(void){
        Serial.begin(9600);
        pins.board_name = BOARD_DEFAULT;
        pins.reqn_pin = A2;
        pins.rdyn_pin = D5;
        pins.mosi_pin = A5;
        pins.miso_pin = A4;
        pins.sck_pin = A3;
        pins.spi_clock_divider = SPI_CLOCK_DIV8; //2 MHz
        pins.reset_pin = D2;
        pins.active_pin = NRF_UNUSED;
        pins.optional_chip_sel_pin = NRF_UNUSED;
        pins.interface_is_interrupt = false; //Polling is used
        pins.interrupt_number = NRF_UNUSED;
        rbc_mesh_hw_init(&pins);

We start the serial connection (in case you want some printlns), configure our pins and choose a clock divider that sets the SPI clock to 2 MHz (we could go down to the divider SPI_CLOCK_DIV64, which would give us 250 kHz). The hardware is initialized with this configuration. Next, the software configuration is done as below:

        Spark.function("set_val", set_val);
        Spark.function("get_val_req", get_val_req);
        Spark.variable("state", &state, INT);
        Spark.variable("lastResponse", &lastResponse, INT);
        return;
    }

We register the two functions set_val and get_val_req as callback functions for POST requests, and the variables state and lastResponse as readable through GET requests, and that's it. Basic initialization is done. Next, we need to initialize the connection from the SPI master (Spark Core) to the SPI slave (nRF51-DK) and provide the slave with the basic data about the mesh. This cannot happen in one long function call, so we divide it into small parts and execute them in the main loop. A state machine is responsible for executing the next step:

    int initState = 0;
    bool newMessage = false;

    void initConnectionSlowly(){
        uint32_t accAddr = 0xA541A68F;
        switch(initState) {

We need an additional state that counts how far we have come in the initialization process, and a flag newMessage, which is set in the main loop, to see whether the slave has reacted to the last command so that we can proceed.

            case 0: //Initial State
                rbc_mesh_init(accAddr, (uint8_t) 38, (uint8_t) 2, (uint32_t) 0x64000000);
                initState++;
                break;

In the beginning we just send the data describing the connection to the mesh: the access address, the channel, the number of handles and, as the last argument, the advertising interval in milliseconds. The interval is supposed to be 100, but the two connected devices have different endianness, so the value must be written with its bytes reversed (0x00000064 becomes 0x64000000), the way the nRF51-DK understands it.
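The byte reversal of the interval can be checked with a few lines of standalone C++. This is only a sketch of the arithmetic: `byte_swap32` and `example_interval` are helper names introduced here for illustration, not functions of the mesh library.

```cpp
#include <cassert>
#include <cstdint>

// Reverse the byte order of a 32-bit value (hypothetical helper).
constexpr uint32_t byte_swap32(uint32_t v) {
    return ((v & 0x000000FFu) << 24) |
           ((v & 0x0000FF00u) << 8)  |
           ((v & 0x00FF0000u) >> 8)  |
           ((v & 0xFF000000u) >> 24);
}

// Usage example: 100 ms is 0x00000064, which becomes 0x64000000,
// the constant passed to rbc_mesh_init in the sketch.
void example_interval() {
    assert(byte_swap32(100u) == 0x64000000u);
}
```

Applying the helper twice returns the original value, so the same function converts in both directions.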

            case 1: // Wait for Command Response for Init
                if(newMessage){
                    rbc_mesh_value_enable((uint8_t) 1);
                    initState++;
                }
                break;
            case 2: // Wait for Command Response value enable for mesh handle 1
                if(newMessage){
                    rbc_mesh_value_enable((uint8_t) 2);
                    initState++;
                }
                break;

States 1 and 2 share a very similar structure: we wait until a message from the slave arrives to tell us that the last request was handled. Then we send the command to enable publishing of a handle.

            case 3: // Wait for Command Response value enable for mesh handle 2
                if(newMessage){
                    state = ready;
                    Serial.println("init done");
                }
        }
    }

State 3 is just there to wait for the last acknowledgement from the slave. Then the initialization is done and we move to the state ready.
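To see the step-by-step behaviour without hardware, the same idea can be simulated on a PC. The following is a rough sketch, not the library's code: `InitSequencer` and `example_init_sequence` are hypothetical names, and instead of calling rbc_mesh_init and rbc_mesh_value_enable the sequencer only records which command would have been sent.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Hypothetical stand-in for the init state machine of the sketch.
struct InitSequencer {
    int step = 0;
    bool done = false;
    std::vector<std::string> sent;  // commands that would go out over SPI

    // Call once per loop iteration; `ack` says whether the slave answered
    // the previous command since the last call.
    void poll(bool ack) {
        switch (step) {
            case 0:  // nothing sent yet: send the mesh parameters
                sent.push_back("init");
                step = 1;
                break;
            case 1:  // waiting for the response to init
                if (ack) { sent.push_back("enable 1"); step = 2; }
                break;
            case 2:  // waiting for the response to "enable 1"
                if (ack) { sent.push_back("enable 2"); step = 3; }
                break;
            case 3:  // waiting for the response to "enable 2"
                if (ack) { done = true; }
                break;
        }
    }
};

// Usage example: one poll to start plus three acknowledged polls finish it.
void example_init_sequence() {
    InitSequencer s;
    s.poll(false);
    s.poll(true);
    s.poll(true);
    s.poll(true);
    assert(s.done && s.sent.size() == 3);
}
```

Each call does at most one small piece of work and returns immediately, which is the property the main loop relies on.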

One last bit and we are done:

    void loop() {
        if(state == init){
            initConnectionSlowly();
        }
        //Process any ACI commands or events
        serial_evt_t evnt;
        newMessage = rbc_mesh_evt_get(&evnt);
        if(state == waitingForEvent && newMessage
                && evnt.opcode == SERIAL_EVT_OPCODE_CMD_RSP){
            state = ready;
            lastResponse = evnt.params.cmd_rsp.response.val_get.data[0];
        }
    }

That's the main loop. We check whether we need to execute the initialization, and then we check for new events. If there is a new message whose opcode indicates a command response, and we were waiting for one (which happens shortly after a callback function is triggered), we write the response into lastResponse. The response comes in the form of a serial_evt_t message from the SPI slave. Check serial_evt.h in the library to see all possible data fields in the message.
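The interplay between the cloud callbacks and the main loop can be summarized as a deferred response: the callback only queues the request, and the answer is stored when it arrives. Below is a minimal standalone sketch of that pattern. The `Gateway` type, its members and `example_deferred_read` are hypothetical and chosen for illustration; the return value 0 for an accepted request is an assumption, since the real callbacks return whatever the mesh library call returns.

```cpp
#include <cassert>

// Hypothetical model of the gateway logic, without SPI or cloud access.
struct Gateway {
    enum State { Init, Ready, WaitingForEvent };
    int state = Ready;
    int lastResponse = 0;

    // Cloud function handler: must return quickly, so it only marks the
    // request as pending. Returns -1 while another request is in flight.
    int request_value() {
        if (state != Ready) return -1;
        state = WaitingForEvent;
        return 0;  // assumed success code for this illustration
    }

    // Called from the main loop when the slave delivers a command response.
    void on_command_response(int data) {
        if (state != WaitingForEvent) return;  // nobody asked: ignore
        lastResponse = data;
        state = Ready;
    }
};

// Usage example: request, wait for the response, then read the result.
void example_deferred_read() {
    Gateway g;
    assert(g.request_value() == 0);
    g.on_command_response(1);
    assert(g.state == Gateway::Ready && g.lastResponse == 1);
}
```

A client therefore needs two steps to read a value: trigger the request, then read lastResponse once the state is ready again.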

Accessing the Mesh

We have the Particle Core working, but now we want to interact with it by sending commands. All interaction is done through HTTP POST and GET requests, for which we have already registered the handlers. To call them, Particle offers a web service at https://api.particle.io to which we can send our requests. From there they are forwarded to our device and executed there. We then receive a verbose response which contains a field called "result" or "return_value".

Our Particle device is identified by an ID, which you should already have noticed while programming. You can look it up on the Particle build website by clicking the "cores" button and then the little arrow next to your core. We send that ID in the URL of our HTTP request, following this pattern:

https://api.particle.io/v1/devices/[device_ID]/[function/variable_name]

So suppose our core has the ID 0123456 and we want to call the function or read the variable xyz. Then our URL looks like this:

https://api.particle.io/v1/devices/0123456/xyz

The difference between a function call and a variable read is whether we access that URL via a POST or a GET request.

In addition to the device ID we need an access token to identify the user. You can check your token at the spark build website through clicking on the "settings" button. We will have to send the access token along with the rest of our request as a parameter.

With that we have everything we need to send an HTTP GET request to our device to read a variable. We registered the variable "state" to see whether our setup is initialized, so let's check it. Suppose we have the access token 98765; we then build our URL like this:

https://api.particle.io/v1/devices/0123456/state?access_token=98765

Sending GET requests is what browsers do all day, so we just enter the URL like a website address and get a response which hopefully shows a field called "result" with the value 1, which means the device is ready for more requests.
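If you want to generate these URLs in a program instead of typing them, the pattern is plain string concatenation. The following is a small sketch under the assumption that the device ID, variable name and token contain only characters that need no URL escaping; `variable_url` and `example_state_url` are hypothetical helper names.

```cpp
#include <cassert>
#include <string>

// Build the URL for reading a cloud variable, following the pattern
// https://api.particle.io/v1/devices/[device_ID]/[variable_name].
// No URL escaping is done here (assumption: plain alphanumeric inputs).
std::string variable_url(const std::string& device_id,
                         const std::string& name,
                         const std::string& access_token) {
    return "https://api.particle.io/v1/devices/" + device_id + "/" + name +
           "?access_token=" + access_token;
}

// Usage example with the placeholder ID and token used in the text.
void example_state_url() {
    assert(variable_url("0123456", "state", "98765") ==
           "https://api.particle.io/v1/devices/0123456/state?access_token=98765");
}
```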

Calling a function is a bit more complicated, since we need to send the arguments and the call is made with an HTTP POST request. One way to do it is via html forms, like in this file:

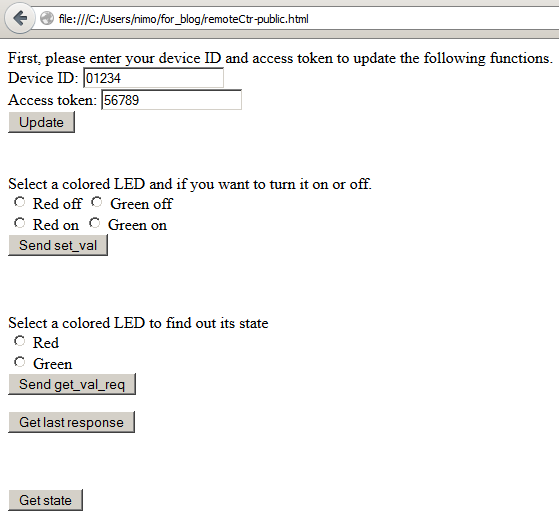

remoteCtr-public.html

It can be used as a remote control in your browser to set and read the states of the LEDs. A little preparation is needed, since the access token and the device ID are needed quite often and mine won't help you.

So you first have to insert your device ID and your access token and then press the update button. Once that is done, you can control your application: check the initialization status with one button, activate or deactivate LEDs with another, and read their status (for this you need the two buttons "Send get_val_req" followed by "get last response").

All right, that's it. Try it out, and if you have any questions, ask them on the devzone (https://devzone.nordicsemi.com/questions/).
