We're trying to reduce noise inside a device using the nRF9160, and the last noisy component we have is a 6V -> 3.3V switching power supply for the nRF.

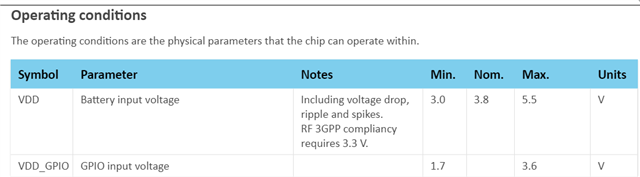

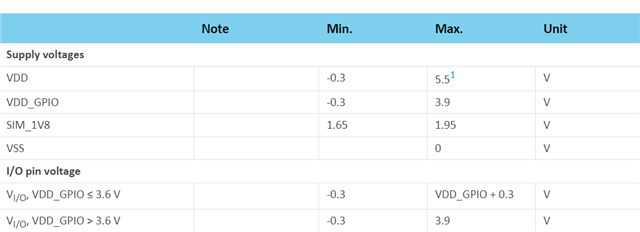

One idea on the table now is to replace the switching supply with a linear one, but dropping from 6V to 3.3V with a linear regulator would increase battery consumption too much. However, powering the nRF9160 from 5V would make it feasible, at least in theory.
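To make that reasoning concrete, here is a rough sketch of the arithmetic. It assumes an ideal linear regulator (quiescent current ignored) and, more importantly, assumes the nRF9160 behaves like a constant-power load, which is exactly what we are unsure about. The 33 mW load figure is a made-up placeholder, not a measurement.

```python
def ldo_efficiency(v_in, v_out):
    """Ideal linear-regulator efficiency (quiescent current ignored).

    Input and output current are equal, so P_out / P_in = V_out / V_in.
    """
    return v_out / v_in


def battery_current_constant_power(p_load_w, v_out):
    """Battery current through an ideal linear regulator.

    ASSUMPTION: the load draws constant power regardless of its supply
    voltage. A linear regulator passes the load current straight through,
    so I_batt = I_load = P_load / V_out.
    """
    return p_load_w / v_out


V_BATT = 6.0     # battery voltage from the post
P_LOAD_W = 0.033  # hypothetical average load power, for illustration only

for v_out in (3.3, 5.0):
    eff = ldo_efficiency(V_BATT, v_out)
    i_batt_ma = battery_current_constant_power(P_LOAD_W, v_out) * 1000
    print(f"{v_out} V rail: efficiency {eff:.0%}, battery current {i_batt_ma:.2f} mA")
```

If the load instead drew the same current at both voltages, the battery current through a linear regulator would be identical at 3.3V and 5V, and the higher rail would bring no saving. So the whole idea hinges on how the nRF9160's current draw scales with supply voltage.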

All our peripherals are either 5V tolerant or already 5V parts that use level converters from 3.3V to 5V, so this is not an issue.

In terms of usage, it's an IoT device that sleeps most of the time, with regular wakeups (every 200 ms up to 1 s) to quickly read some sensors. There is modem activity once in a while, as the cell tower demands.

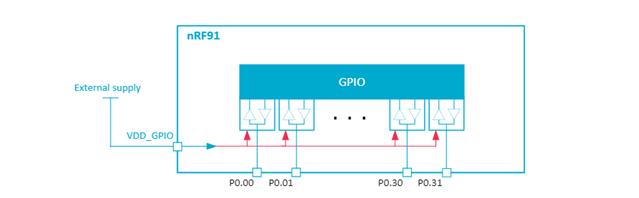

So the question is: is there any major difference in power consumption when running the nRF9160 from 5V vs 3.3V? I see that the SIM is using 1.8V, but I presume that one is already generated internally. Is there anything else we should be aware of when powering from 5V?

Thanks a lot for the support!