I want to send a continuous stream of sensor data over BLE. I'm currently using the NUS (Nordic UART Service) GATT profile and connecting directly to a third-party device, e.g. a computer or phone.

The required bandwidth is about 256 kbit/s, so in theory it is well below the practical limit of around 1.4 Mbit/s on 2M PHY that I have observed so far with the throughput sample.
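A minimal sketch of the budget arithmetic, assuming the full 256 kbit/s (32,000 bytes/s) has to cross the link and each notification carries ATT_MTU - 3 bytes. The helper names are hypothetical, and retransmissions and lower-layer overhead are ignored:

```c
#include <stdint.h>

/* Bytes the stream produces during one connection interval, rounded up.
 * Integer math in microseconds avoids floating-point surprises. */
static uint32_t bytes_per_interval(uint32_t bits_per_s, uint32_t interval_us)
{
    uint64_t bytes_per_s = bits_per_s / 8u;
    return (uint32_t)((bytes_per_s * interval_us + 999999u) / 1000000u);
}

/* Notifications needed per interval when each carries (att_mtu - 3) bytes:
 * a notification spends 1 byte on the opcode and 2 on the attribute handle. */
static uint32_t notifications_per_interval(uint32_t bits_per_s,
                                           uint32_t interval_us,
                                           uint16_t att_mtu)
{
    uint32_t payload = (uint32_t)att_mtu - 3u;
    uint32_t bytes = bytes_per_interval(bits_per_s, interval_us);
    return (bytes + payload - 1u) / payload; /* ceiling division */
}
```

For example, at a 50 ms interval with ATT_MTU 247 that is 1600 bytes, i.e. 7 notifications that all have to be queued and sent within every connection event; at 7.5 ms it is 240 bytes, a single notification.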

So far I have only managed to get a reliable stream by heavily reducing the sensor sampling rate, to 128-180 kbit/s. Even then, the transfer is only reliable with very specific combinations of MTU size and connection interval, and I can't see a clear reason why: both larger and smaller MTUs, and both longer and shorter connection intervals, can make the connection better or worse. By trial and error I sometimes hit "magic numbers" that give stable results.
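One plausible source of such "magic numbers" (an assumption, not confirmed here) is how ATT_MTU interacts with the Link Layer data length. Without Data Length Extension an LL payload holds 27 bytes; with it, up to 251. A full notification becomes an L2CAP PDU of ATT_MTU + 4 bytes (2-byte length + 2-byte channel ID), so ATT_MTU 247 fits exactly one 251-byte LL payload, while ATT_MTU 250 spills 3 bytes into a second, nearly empty packet. A sketch, assuming every notification fills the whole MTU; it does not model how many packets fit into one connection event:

```c
#include <stdint.h>

#define ATT_NTF_HDR 3u /* notification opcode (1) + attribute handle (2) */
#define L2CAP_HDR   4u /* L2CAP basic header: length (2) + channel ID (2) */

/* LL data PDUs needed for one full-size notification, given the negotiated
 * ATT_MTU and the LL max TX payload (27 without DLE, up to 251 with it). */
static uint32_t ll_pdus_per_notification(uint16_t att_mtu, uint16_t ll_max_payload)
{
    /* The ATT PDU is att_mtu bytes including its own 3-byte header. */
    uint32_t l2cap_pdu = (uint32_t)att_mtu + L2CAP_HDR;
    return (l2cap_pdu + ll_max_payload - 1u) / ll_max_payload;
}

/* Percentage (rounded down) of the LL payload capacity used by sensor data;
 * a rough proxy for how much of each packet slot is spent on useful bytes. */
static uint32_t ll_fill_percent(uint16_t att_mtu, uint16_t ll_max_payload)
{
    uint32_t data = (uint32_t)att_mtu - ATT_NTF_HDR;
    uint32_t capacity = ll_pdus_per_notification(att_mtu, ll_max_payload)
                        * ll_max_payload;
    return data * 100u / capacity;
}
```

With a 251-byte LL payload, ATT_MTU 247 and 498 both fill their packets almost completely (97% and 98%), while ATT_MTU 250 uses only 49%. If this is the effect, a sniffer trace should show alternating full and near-empty packets.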

So far it has been very unintuitive how to tune these connection parameters to get a stable stream with reasonable latency (<100 ms).
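The <100 ms target also constrains the slab block size, independent of any BLE setting: a sample cannot leave before its block is full, and a full block may then wait up to one connection interval for the next event. A rough lower-bound sketch under exactly that hypothetical model (retransmissions and transfers spanning several events are ignored):

```c
#include <stdint.h>

/* Rough lower bound on sample-to-air latency in microseconds:
 * time to fill one slab block + worst-case wait for the next connection event. */
static uint32_t latency_floor_us(uint32_t block_bytes, uint32_t bits_per_s,
                                 uint32_t conn_interval_us)
{
    uint64_t block_fill_us = (uint64_t)block_bytes * 8u * 1000000u / bits_per_s;
    return (uint32_t)(block_fill_us + conn_interval_us);
}
```

At 256 kbit/s, 1600-byte blocks (50 ms of data) with a 30 ms interval give a floor of 80 ms; 3200-byte blocks alone already account for 100 ms.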

Battery consumption is not the top priority right now, so it would be fine to use the most wasteful configuration as long as it reaches the required bandwidth.

In the code, the sensor (DMIC) currently reads into a memory slab. I pass each block pointer to the BLE write thread and let the DMIC continue sampling into the next block from the slab. For debugging, I log the memory slab's block utilization.
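To tell a steady throughput deficit apart from occasional stalls, it may help to log the worst-case backlog (a high-water mark) rather than only the instantaneous utilization. A plain-C sketch of the block hand-off, assuming a single producer and a single consumer; in Zephyr a `k_msgq` would normally carry the pointers, and this models only the bookkeeping, with no locking. `QUEUE_DEPTH` and all names are hypothetical:

```c
#include <stdbool.h>
#include <stddef.h>

#define QUEUE_DEPTH 8 /* hypothetical: match the number of slab blocks */

/* FIFO of block pointers from the DMIC side to the BLE write thread. */
struct block_queue {
    void *slots[QUEUE_DEPTH];
    size_t head;       /* next slot to read */
    size_t count;      /* blocks currently queued */
    size_t high_water; /* worst backlog seen so far */
};

static bool queue_put(struct block_queue *q, void *block)
{
    if (q->count == QUEUE_DEPTH) {
        return false; /* BLE side is a full queue behind */
    }
    q->slots[(q->head + q->count) % QUEUE_DEPTH] = block;
    q->count++;
    if (q->count > q->high_water) {
        q->high_water = q->count; /* record the peak for later logging */
    }
    return true;
}

static void *queue_get(struct block_queue *q)
{
    if (q->count == 0) {
        return NULL;
    }
    void *block = q->slots[q->head];
    q->head = (q->head + 1) % QUEUE_DEPTH;
    q->count--;
    return block;
}
```

A high-water mark that creeps up slowly would point to a link that is too slow on average; one that jumps from near zero to full would point to stalls.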

The BLE write thread then sends the data out in one or more messages, depending on the MTU size and the memory block size.
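A sketch of that fragmentation step, assuming each notification carries at most ATT_MTU - 3 bytes. The send hook type and `log_sink` are hypothetical; in the real firmware the hook would wrap the NUS send call (`bt_nus_send()` in nRF Connect SDK), and `log_sink` only stands in for it on the bench:

```c
#include <stddef.h>
#include <stdint.h>

/* Hypothetical send hook: returns 0 on success, negative on error. */
typedef int (*send_fn_t)(const uint8_t *data, uint16_t len, void *ctx);

/* Split one slab block into notifications of at most (att_mtu - 3) bytes.
 * Returns the number of chunks sent, or a negative error. */
static int send_block(const uint8_t *block, size_t block_len, uint16_t att_mtu,
                      send_fn_t send_cb, void *ctx)
{
    if (att_mtu <= 3u) {
        return -1; /* no room for payload (the spec minimum ATT_MTU is 23) */
    }
    const size_t max_chunk = (size_t)att_mtu - 3u; /* opcode + handle */
    int chunks = 0;

    for (size_t off = 0; off < block_len; off += max_chunk) {
        size_t len = block_len - off;
        if (len > max_chunk) {
            len = max_chunk;
        }
        int err = send_cb(block + off, (uint16_t)len, ctx);
        if (err < 0) {
            return err; /* caller decides whether to retry or drop */
        }
        chunks++;
    }
    return chunks;
}

/* Bench hook: records chunk sizes instead of transmitting. */
struct chunk_log {
    uint16_t lens[16];
    int n;
};

static int log_sink(const uint8_t *data, uint16_t len, void *ctx)
{
    struct chunk_log *rec = ctx;
    (void)data;
    if (rec->n >= (int)(sizeof(rec->lens) / sizeof(rec->lens[0]))) {
        return -1; /* log full: report an error like a busy stack would */
    }
    rec->lens[rec->n++] = len;
    return 0;
}
```

If the block length is not a multiple of ATT_MTU - 3, every block ends in a short notification (24 bytes for a 1000-byte block at ATT_MTU 247) that costs a packet slot for little data; sizing blocks as a multiple of the chunk size would avoid that.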

Sometimes things go wrong right away and the memory blocks are used up immediately; other times it's stable for a while and then suddenly goes sideways: the connection can't keep up with the sensor stream and the slab fills up.
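A small steady deficit and a sudden stall can look the same in the slab log. A sketch under a hypothetical constant-rate model, estimating how long the slab can absorb a complete stall and how long it lasts under a link that drains steadily but too slowly:

```c
#include <stdint.h>

/* Milliseconds the BLE side can stall completely before the slab is full,
 * assuming the DMIC keeps producing at a constant rate and nothing drains. */
static uint32_t max_stall_ms(uint32_t free_blocks, uint32_t block_bytes,
                             uint32_t bits_per_s)
{
    uint64_t bytes = (uint64_t)free_blocks * block_bytes;
    return (uint32_t)(bytes * 8u * 1000u / bits_per_s);
}

/* Milliseconds until overflow when the link drains steadily but too slowly.
 * Returns UINT32_MAX if the link keeps up. */
static uint32_t ms_until_overflow(uint32_t free_bytes, uint32_t produce_bits_per_s,
                                  uint32_t drain_bits_per_s)
{
    if (drain_bits_per_s >= produce_bits_per_s) {
        return UINT32_MAX;
    }
    uint64_t deficit = produce_bits_per_s - drain_bits_per_s; /* bits/s */
    return (uint32_t)((uint64_t)free_bytes * 8u * 1000u / deficit);
}
```

For example, 8 free blocks of 1024 bytes absorb a 256 ms stall at 256 kbit/s, while a link draining only 240 kbit/s (about 6% short) fills the same 8 KiB in about 4 s, which could look like a connection that is stable for a while and then suddenly falls behind.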

How do I go about debugging this, and are there more in-depth guides for this scenario, e.g. on the PHY settings available to me?

I only found this on the topic so far: Building a Bluetooth application on nRF Connect SDK - Part 3 Optimizing the connection

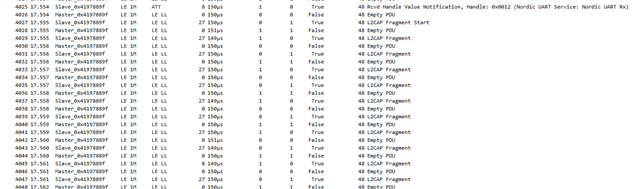

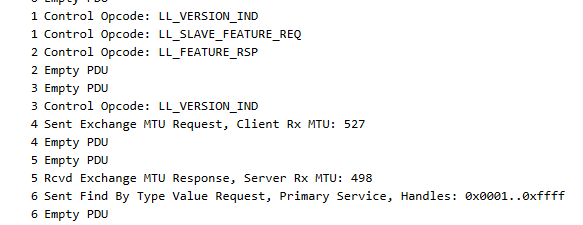

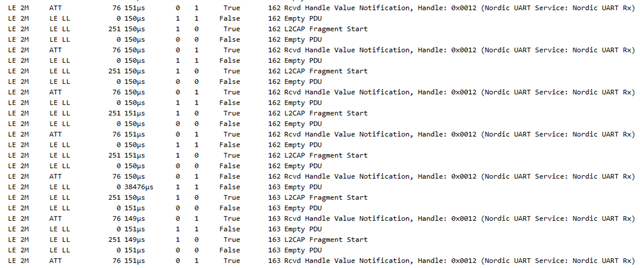

I have looked at the over-the-air traffic with the BLE sniffer but could not spot anything obvious, such as an excessive number of retransmissions.

Would a custom GATT profile be better suited? NUS seemed like a useful basis for this application, but maybe I am overlooking a constraint here.