I would like to implement the Bluetooth Mesh Directed Forwarding feature in my code, but I cannot find a Zephyr library for it. Is there a link to Zephyr code or a library for Directed Forwarding?


Hi,

The current implementation does not support Bluetooth Mesh Directed Forwarding (MDF). See Subnet Bridge and Directed Forwarding.

Regards,

Amanda H.

This feature is a key factor in our decision to choose Bluetooth Mesh over Zigbee and Thread for our upcoming project. When can we expect support for Bluetooth Mesh Directed Forwarding (MDF) in Zephyr?

Can you please elaborate on the use case? Number of nodes, topology, gateway vs. no gateway, vendor model vs. standard model, payloads, etc.?

In some scenarios we see no real impact from MDF; it provides an advantage only in specific use cases.

Thanks

BR

LA

We are a leading manufacturer of EC fans integrated into energy-efficient HVAC systems. In cleanroom environments, we may deploy up to 1,000 EC fans connected via a mesh topology with a central gateway. Our implementation currently uses a vendor-specific model with a maximum payload of 80 bytes, with potential plans to scale up to 256 bytes. We believe that the MDF (Mesh Directed Forwarding) feature will significantly help in reducing network traffic in such high-density deployments.

OK. Based on our estimations, MDF can provide benefits (in some topologies) only if the maximum number of hops between sender and receiver exceeds 5 or 6. Otherwise MDF is not useful; it adds more bloat than help. Note: MDF is not currently supported in our SDK. We suggest exploring other techniques that can help limit or isolate traffic, for example Bluetooth® Mesh Subnet Bridging, which is already supported in nRF Connect SDK: https://www.bluetooth.com/mesh-subnet-bridging/

We are currently in the initial analysis phase, and it may take another 2 to 3 years before we are ready to launch the complete product in the field. Is MDF support likely to be added to your SDK within this development timeline?

Additionally, we would appreciate your guidance on the practical scalability of the network. Specifically, how many nodes can be reliably supported in a single network with a gateway? For instance, in the case of managing 1000 fan nodes, how should we plan the number of subnetworks required to ensure efficient handling of network traffic?

1,000 nodes is quite possible with mesh, but it also depends on the topology. Two things will help you get the performance right:

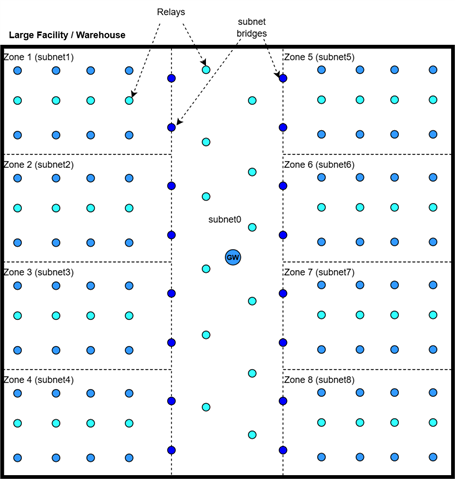

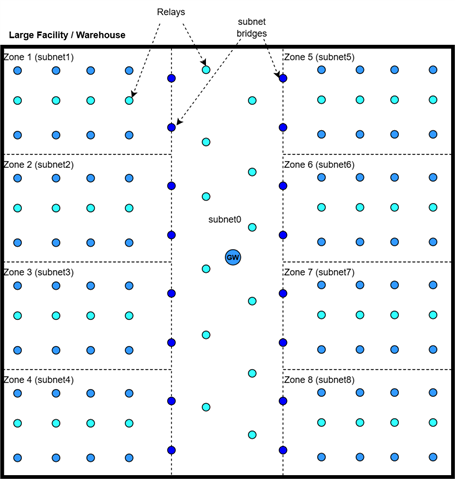

A) Reduce the number of relay nodes: you probably don't need every node to be a relay. In our testing with 99 nodes, we covered two scenarios: (a) all nodes as relays and (b) only corridor nodes as relays. The right choice depends on how far apart the nodes are. See Large scale Bluetooth mesh testing.

B) If the topology allows, you can use subnets to reduce or limit traffic:

When the GW sends a message to destination N, the message propagates across the GW's subnet. If it is addressed to a bridged address, the bridge that has been configured to handle messages destined for address N re-encrypts the message with the other subnet's network key and re-broadcasts it. The relays in that subnet then pick up the message and relay it.

When destination N sends a message to the GW, the same happens in reverse: the message propagates across N's subnet, and the bridge that has been configured to handle messages destined for the GW address re-encrypts it with the other subnet's network key and re-broadcasts it. The relays in that subnet then pick up the message and relay it.

The whole process is transparent to the nodes. Only during network setup does the network configurator need to configure the subnet bridges and add the appropriate SRC-DST pairs to each bridge's bridging table.

For the nodes, I would suggest the nRF54L10 or nRF54L15, with dual-bank DFU and maximum Tx power. However, the final choice depends on many aspects outside Nordic's control, including your application code and logic.

Here are some additional aspects that are important for practical scalability:

Overall, Nordic can support you along the way so that you end up with the right implementation. Please open tickets (one topic each, to keep the right focus) with your specific questions as they come up.

For roadmaps, please contact your local sales manager.
