Hello,

I ran the example provided in SDK v15.0.0 to measure VDD on the nRF52-DK, with the channel configured as:

NRF_DRV_SAADC_DEFAULT_CHANNEL_CONFIG_SE(NRF_SAADC_INPUT_VDD);

I left the default parameters unchanged:

- resolution = 10 bits

- reference = 0.6 V

- gain = 1/6

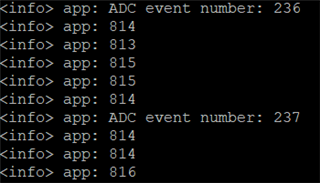

As the console output shows, I get an average raw value of 815,

which gives 815 * 0.6 / 1024 * 6 = 2.87 V. That is the right value for the nRF52832.
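For reference, the conversion used above can be written as a small C helper. This is only a sketch: `saadc_raw_to_volts` is a hypothetical name, not an SDK function, and it assumes a single-ended measurement on an ideal ADC with no offset or gain error.

```c
#include <stdint.h>

/* Hypothetical helper, not part of the nRF5 SDK.
 * Converts a raw single-ended SAADC sample to volts:
 *   V = raw * reference / 2^resolution / gain
 * Assumes an ideal ADC (no offset or gain error). */
double saadc_raw_to_volts(int16_t raw, unsigned resolution_bits,
                          double reference_v, double gain)
{
    /* Number of codes at this resolution, e.g. 1024 for 10 bits. */
    double full_scale = (double)(1UL << resolution_bits);

    return (double)raw * reference_v / full_scale / gain;
}
```

Calling `saadc_raw_to_volts(815, 10, 0.6, 1.0 / 6.0)` reproduces the figure of roughly 2.87 V computed by hand.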

The problem is that when I change the resolution (to 8 bits or 14 bits), I still get the same value of 815. How do you explain that?
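To make the expectation concrete: for the same input voltage, the raw count should scale by a factor of 2 per bit of resolution. The sketch below shows that arithmetic; `saadc_rescale_raw` is a hypothetical helper (not an SDK function) and assumes an ideal ADC.

```c
#include <stdint.h>

/* Hypothetical helper, not part of the nRF5 SDK.
 * Given a raw count measured at from_bits of resolution, returns the
 * count an ideal ADC would report at to_bits for the same input voltage.
 * Integer division truncates when reducing the resolution. */
int32_t saadc_rescale_raw(int32_t raw, unsigned from_bits, unsigned to_bits)
{
    if (to_bits >= from_bits) {
        return raw * (int32_t)(1L << (to_bits - from_bits));
    }
    return raw / (int32_t)(1L << (from_bits - to_bits));
}
```

With the 10-bit reading of 815, this gives about 203 at 8 bits and 13040 at 14 bits, so an unchanged reading of 815 suggests the resolution setting is not actually taking effect.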

Thanks,

John.