Hi,

I am reading a voltage from an analog sensor whose output changes in 0.02 V steps, so I need the ADC reading to be accurate to within 0.02 V. Right now I am off by about 0.05 V: where I should get 2.94 V, I read 2.89 V, and this changes the computed sensor value drastically. I am already using 14-bit resolution. How else can I increase the accuracy?
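A fixed error like 2.89 V read for 2.94 V is often a systematic offset or gain error, and one generic way to correct it in firmware is a two-point linear calibration. The sketch below assumes the error is linear over the range of interest. `adc_cal_t`, `adc_cal_from_points` and `adc_cal_apply` are hypothetical helpers, not nRF5 SDK functions, and the second calibration point has to come from your own measurement against a trusted meter.

```c
#include <assert.h>

// Linear correction: true_volts = gain * measured_volts + offset.
typedef struct {
    float gain;
    float offset;
} adc_cal_t;

// Derive gain and offset from two (measured, true) voltage pairs, e.g.
// the ADC reading and a multimeter reading at two known inputs.
static adc_cal_t adc_cal_from_points(float meas_lo, float true_lo,
                                     float meas_hi, float true_hi)
{
    adc_cal_t cal;
    assert(meas_hi != meas_lo); // the two points must differ
    cal.gain = (true_hi - true_lo) / (meas_hi - meas_lo);
    cal.offset = true_lo - cal.gain * meas_lo;
    return cal;
}

// Apply the correction to a voltage already computed from raw counts.
static float adc_cal_apply(const adc_cal_t *cal, float measured)
{
    return cal->gain * measured + cal->offset;
}
```

Usage would look like `adc_cal_t cal = adc_cal_from_points(meas_lo, true_lo, 2.89f, 2.94f);` followed by `adc_cal_apply(&cal, volts)` on every reading, where `meas_lo`/`true_lo` is a second measured pair. Before a software correction it may also be worth running the SAADC's built-in offset calibration (`nrf_drv_saadc_calibrate_offset()` in the nRF5 SDK legacy driver).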

I am using single-ended mode with no oversampling, a gain of 1/6 and the internal 0.6 V reference.
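Assuming an nRF52-series SAADC, the single-ended transfer function in the product specification is RESULT = V_in × (GAIN / REFERENCE) × 2^RESOLUTION. A small worked check of that arithmetic for these settings follows; the macro and function names are illustrative, not SDK names.

```c
#include <assert.h>
#include <stdint.h>

// Settings taken from the post: gain 1/6, 0.6 V reference, 14-bit resolution.
#define SAADC_REF_VOLTS 0.6f
#define SAADC_GAIN      (1.0f / 6.0f)
#define SAADC_RES_BITS  14

// Full-scale input voltage: REFERENCE / GAIN = 0.6 V / (1/6) = 3.6 V.
static float saadc_full_scale_volts(void)
{
    return SAADC_REF_VOLTS / SAADC_GAIN;
}

// Counts per volt: 2^14 / 3.6 V = 4551.11, the divisor used in the callback.
static float saadc_counts_per_volt(void)
{
    return (float)(1u << SAADC_RES_BITS) / saadc_full_scale_volts();
}

// Convert a raw single-ended sample to volts.
static float saadc_counts_to_volts(int16_t raw)
{
    assert(raw <= (1 << SAADC_RES_BITS) - 1); // 14-bit single-ended maximum is 16383
    return (float)raw / saadc_counts_per_volt();
}
```

With these numbers one LSB is about 0.22 mV, the 0.02 V sensor step is about 91 counts, and the 0.05 V discrepancy is about 228 counts. Resolution is therefore unlikely to be the limiting factor; the gap looks more like a systematic offset or gain error.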

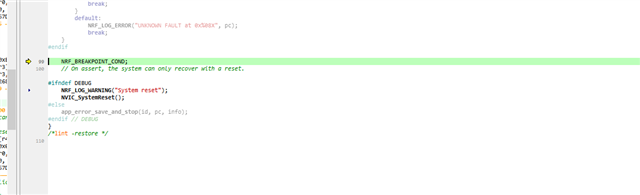

If I try to enable oversampling, I see unusual behavior: the debugger stops with a "NO BREAKPOINT CONDITION".
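If hardware oversampling cannot be enabled, averaging several samples in software is a possible substitute. This is a different technique from the SAADC's own oversample setting: it reduces random noise but will not remove a fixed offset such as the 0.05 V seen here. `saadc_average` is a hypothetical helper, sketched under the assumption that samples arrive as signed 16-bit values.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

// Average n raw samples in software. An int32_t accumulator cannot overflow
// for up to 65536 samples of any int16_t value.
static float saadc_average(const int16_t *samples, size_t n)
{
    int32_t sum = 0;
    size_t i;
    assert(n > 0 && n <= 65536u);
    for (i = 0; i < n; i++) {
        sum += samples[i];
    }
    return (float)sum / (float)n;
}
```

The averaged count can then go through the same 4551.11 division as a single sample.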

Could there be a fault in my code? Maybe I am losing accuracy because of type casting?

Please suggest!

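```c
void saadc_callback(nrf_drv_saadc_evt_t const *p_event)
{
    if (p_event->type == NRF_DRV_SAADC_EVT_DONE) {
        ret_code_t err_code;
        // nrf_saadc_value_t is int16_t; keep samples signed so a small
        // negative reading near 0 V does not wrap to a huge unsigned value.
        // Sized from the same macro as the loop (assumed to be at least 5).
        nrf_saadc_value_t adc_value[SAADC_SAMPLES_IN_BUFFER];
        float T, T1, T2, P1, P2; // T, T1, T2, P1 are not used in this excerpt
        uint8_t nus_to_send_array[2]; // not used in this excerpt
        int i;

        NRF_LOG_INFO("ADC event number: %d", (int)m_adc_evt_counter);

        // Copy the finished buffer out of the driver's memory.
        for (i = 0; i < SAADC_SAMPLES_IN_BUFFER; i++) {
            adc_value[i] = p_event->data.done.p_buffer[i];
        }

        // Hand the buffer back to the driver so sampling continues.
        err_code = nrf_drv_saadc_buffer_convert(p_event->data.done.p_buffer,
                                                SAADC_SAMPLES_IN_BUFFER);
        APP_ERROR_CHECK(err_code);

        // Gain 1/6 with the 0.6 V reference gives 3.6 V full scale;
        // at 14 bits that is 16384 / 3.6 = 4551.11 counts per volt.
        P2 = (float)adc_value[4] / 4551.11f; // convert ADC value to voltage
    }
}
```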
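On the type-casting question: a 14-bit sample is represented exactly in a `float`, and dividing by 4551.11 in single precision differs from double precision by well under 1 µV, so the cast alone is unlikely to explain a 0.05 V error. One real pitfall is the storage type: in the nRF5 SDK headers `nrf_saadc_value_t` is `int16_t`, and a slightly negative single-ended reading stored in `uint16_t` wraps around. The two helpers below are hypothetical and exist only to compare the two cases.

```c
#include <assert.h>
#include <stdint.h>

// Conversion when the sample is kept signed, as nrf_saadc_value_t is.
static float volts_from_signed(int16_t raw)
{
    return (float)raw / 4551.11f;
}

// Same conversion after the sample was stored in uint16_t: a raw value of -2
// becomes 65534, which converts to roughly 14.4 V instead of about -0.4 mV.
static float volts_from_unsigned(uint16_t raw)
{
    return (float)raw / 4551.11f;
}
```

Keeping the samples signed (or clamping negatives to 0) avoids the wrap; the float division itself can stay as it is.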