Hi,

We need to save three strings to flash using NVS. Following the code sample in zephyr\samples\subsys\nvs, we initialize the file system and then write and read this data.

To get a correct read-back, we first have to make a dummy write to NVS; otherwise the value read does not match the previously written value.

We have made the following observations:

- doing read/write of the NVS before enabling Bluetooth works fine

- doing read/write after enabling Bluetooth returns the very first data written instead of the last (along with some other strange behaviour)

- the Bluetooth stack performs a second nvs_init() with a larger sector count in the fs structure

Our questions are the following:

- is there more documentation on NVS than the basic developer source?

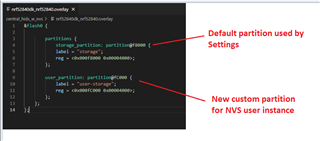

- are there any precautions to take when using "custom" access to NVS at the same time as the Bluetooth stack?

- should we split the storage area for each use, or can the IDs be fully managed by the Bluetooth stack without any problems?

We initialize fs from the DTS flash controller definition (e.g. nRF52833 -> storage begins at 0x7a000).

Config is NCS 1.5.0 or 1.6.0, nRF52840DK

Best regards,

Romain