Hi everyone,

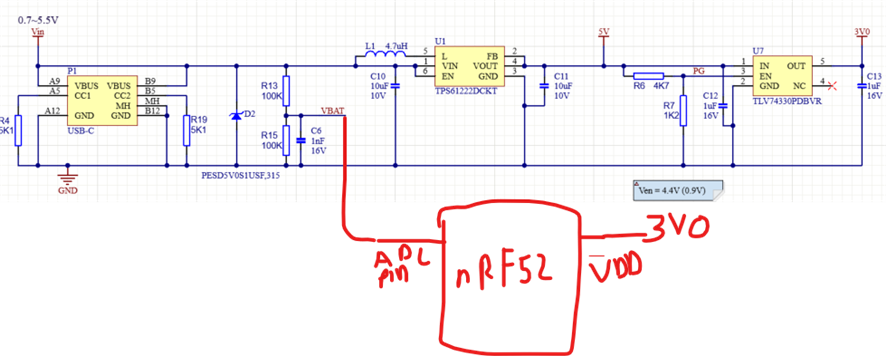

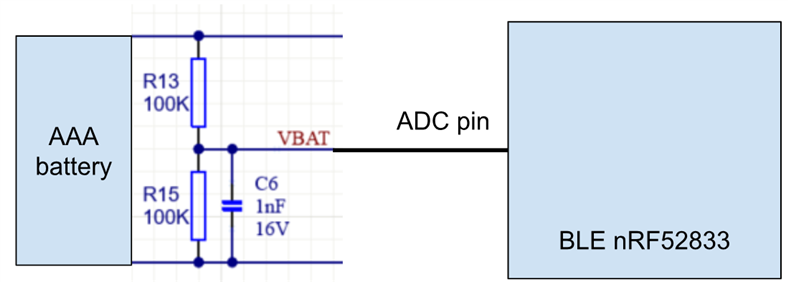

We read the battery (BAT) level from an ADC pin. The setup diagram is shown below.

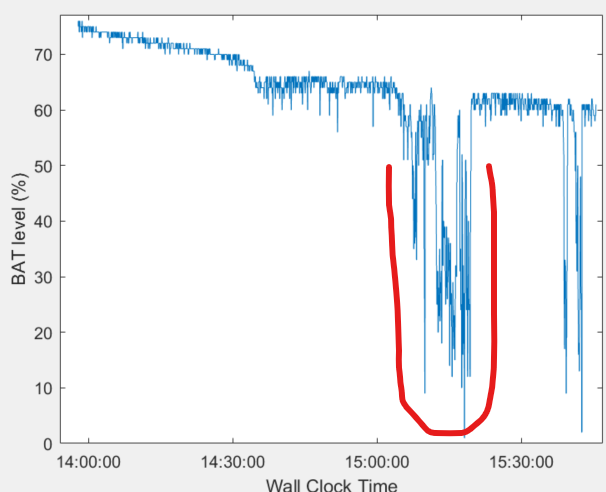

We tested our device at a hospital and observed significant fluctuations in the reported BAT level (highlighted in red). For example, it dropped from 65% to 20%, then jumped back to 60%.

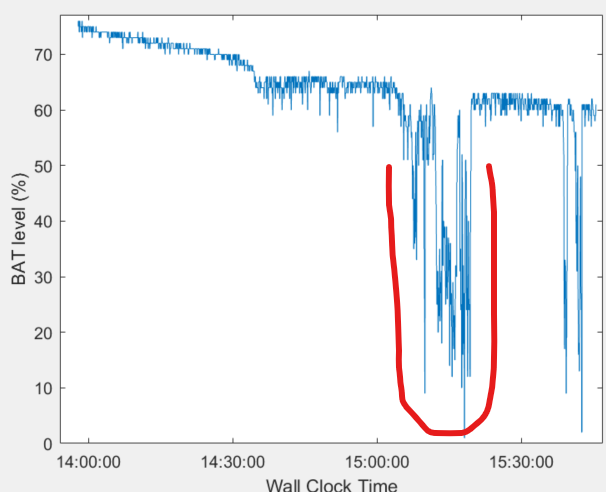

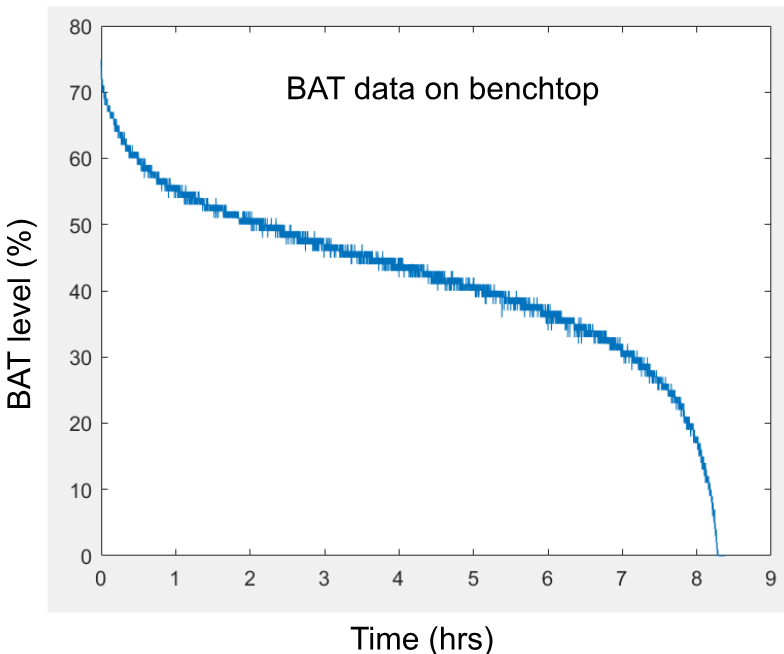

We then took the device and battery back to the office and tried to reproduce the issue on the bench, but we couldn't. Instead, the following is what we got, and the BAT level behaved as expected.

Our hypothesis is that it could be due to an impedance mismatch between the ADC input pin and the output of the voltage divider. However, based on our calculation, the output (Thevenin) impedance of the voltage divider is R13//R15 (50 kOhm), while the input impedance of the ADC pin is more than 1 MOhm, so this should not cause an impedance mismatch.
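As a rough sanity check of that calculation, here is a minimal Python sketch that estimates how much a purely resistive ADC input would load the divider. The individual values of R13 and R15 are an assumption (100 kOhm each, chosen only because they give the 50 kOhm parallel value quoted above), and the ADC input is modeled as a plain 1 MOhm resistor to ground, which ignores any sample-and-hold capacitance.

```python
def parallel(r1: float, r2: float) -> float:
    """Equivalent resistance of two resistors in parallel."""
    return r1 * r2 / (r1 + r2)


def loading_ratio(r_source: float, r_load: float) -> float:
    """Fraction of the unloaded (Thevenin) voltage that appears
    across r_load when it is driven through r_source."""
    return r_load / (r_source + r_load)


# ASSUMPTION: equal 100 kOhm resistors, picked only to match the
# 50 kOhm value of R13//R15 stated in the post.
R13 = 100e3
R15 = 100e3

# Lower bound on the ADC input impedance from the post ("more than 1 MOhm"),
# modeled here as a simple resistor to ground.
R_ADC_IN = 1e6

r_thevenin = parallel(R13, R15)
ratio = loading_ratio(r_thevenin, R_ADC_IN)
error_pct = (1.0 - ratio) * 100.0

print(f"Divider output impedance: {r_thevenin / 1e3:.1f} kOhm")
print(f"Loaded / unloaded voltage: {ratio:.4f}")
print(f"Worst-case loading error: {error_pct:.2f} %")
```

Under these assumptions the loading error is a fixed scale factor of a few percent at most, so in this simple model it would offset the reading rather than make it jump between values.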

I would greatly appreciate any suggestions on this issue. Thanks.